netquirks

Exploring the quirks of Network Engineering

The Label Switched Path Not Taken

With the increasing adoption of Segment Routing as the label distribution method used by Service Providers, there will inevitably be clashes with the tried and true LDP protocol. Indeed, interoperation between SR and LDP is one of the most important features to consider when introducing SR into a network. But what happens if there is no SR-LDP interoperation to be had? This is definitely the case for IPv6, since LDP for IPv6 is, more often than not, non-existent. This is exactly what this quirk will explore. More specifically, it explores how two different vendors, namely Cisco and Arista, tackle an LSP problem involving native IPv6 MPLS2IP forwarding.

I’ll begin by showing the topology and then give an example of how basic IPv4 SR to LDP interoperation works. We’ll then look at a similar scenario using IPv6 and explore how each vendor behaves. There is no “right answer” to this situation, as neither vendor violates any RFC (at least none that I can find), but it is an interesting exploration of how each approaches the same problem.

Setup

I’ll start with a disclaimer: this quirk applies to the following software versions, running in a lab environment:

- Cisco IOS-XR 6.5.3

- Arista 4.25.2F

There is nothing I have seen in either release notes or real-world deployments that would make me think the behaviour described here would be any different on the latest releases, but it’s worth keeping in mind. With that said, let’s look at the setup…

The topology we will look at is as follows:

An EVE-NG lab and the base configs can be downloaded here:

The EVE lab has all interfaces unshut. The goal here is for the CE subnets to reach each other. To accomplish this, R1 and R6 will run BGP sessions between their loopbacks. In this state, IPv6 forwarding will be broken, but we’ll explore that as we go!

I’ve made the network fairly straightforward to allow us to focus on the quirk. Every device except R5 runs SR, with point-to-point L2 ISIS as the underlying IGP. The SRGB base is 17000 on all devices. The IPv4 Node SID index for each router is its router number, and the IPv6 Node SID index is the router number plus 600. R5 is an LDP-only node and, as such, needs a mapping server to advertise its Node SID throughout the network; R6 fulfils this role.
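To make the numbering concrete, here is a small Python sketch that derives each loopback label from the rules above, assuming the uniform SRGB base of 17000. The names (`label_plan`, `IPV6_INDEX_OFFSET`) are hypothetical; only the numbers come from the lab. R5 gets no IPv6 label because the mapping server in R6’s config only maps 10.1.1.5/32.

```python
# Hypothetical helper reproducing the lab's label plan (names are illustrative).
SRGB_BASE = 17000          # identical SRGB base on every SR node in this lab
IPV6_INDEX_OFFSET = 600    # IPv6 Node SID index = router number + 600


def label_plan(routers, sr_routers, mapped_v4=()):
    """Return {router_number: (ipv4_label, ipv6_label)}.

    routers    -- every router number in the topology
    sr_routers -- routers that originate their own prefix SIDs
    mapped_v4  -- non-SR routers whose IPv4 loopback SID is advertised by a
                  mapping server (the lab maps 10.1.1.5/32 only, so no IPv6 SID)
    """
    plan = {}
    for r in routers:
        if r in sr_routers:
            plan[r] = (SRGB_BASE + r, SRGB_BASE + IPV6_INDEX_OFFSET + r)
        elif r in mapped_v4:
            plan[r] = (SRGB_BASE + r, None)
        else:
            plan[r] = (None, None)
    return plan


plan = label_plan(range(1, 7), sr_routers={1, 2, 3, 4, 6}, mapped_v4={5})
for router, (v4, v6) in sorted(plan.items()):
    print(f"R{router}: IPv4 label {v4}, IPv6 label {v6}")
```

The missing IPv6 label for R5 is the root of the quirk explored below.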

To explore the quirk, we will look at forwarding from R1 to R6, loopback-to-loopback. Notice that R3 (a Cisco) and R4 (an Arista) sit on the SR/LDP boundary. IPv4 will be looked at first, to help explain the interoperation between SR and LDP. Once that is done, I’ll demonstrate how each vendor handles IPv6 forwarding differently, which results in forwarding problems.

For now we won’t look at the PE-CE BGP sessions since, without the iBGP sessions, our core control plane is broken.

To get the lay of the land, let’s check the config on R6 (our destination) and make sure R1’s LFIB is programmed correctly.

RP/0/RP0/CPU0:R6#sh run router isis

Wed Nov 23 00:04:51.370 UTC

router isis LAB

is-type level-2-only

net 49.0100.1111.1111.0006.00

log adjacency changes

address-family ipv4 unicast

metric-style wide

advertise passive-only

segment-routing mpls sr-prefer

segment-routing prefix-sid-map advertise-local

!

address-family ipv6 unicast

metric-style wide

advertise passive-only

segment-routing mpls sr-prefer

!

interface Loopback0

passive

address-family ipv4 unicast

prefix-sid absolute 17006

!

address-family ipv6 unicast

prefix-sid absolute 17606

!

!

interface GigabitEthernet0/0/0/3

point-to-point

address-family ipv4 unicast

!

address-family ipv6 unicast

!

!

!

RP/0/RP0/CPU0:R6#sh run segment-routing

Wed Nov 23 00:05:13.681 UTC

segment-routing

global-block 17000 23999

mapping-server

prefix-sid-map

address-family ipv4

10.1.1.5/32 5 range 1

!

!

!

!

RP/0/RP0/CPU0:R6#

The same SRGB is configured on all devices. We can see that R6 has SID index 6 for its IPv4 loopback and index 606 for its IPv6 loopback.

With these two pieces of information, we’d expect R1’s CEF table to use 17006 and 17606 to forward to R6’s IPv4 and IPv6 loopbacks respectively:

RP/0/RP0/CPU0:R1#show cef ipv4 10.1.1.6/32

Wed Nov 23 00:11:57.523 UTC

10.1.1.6/32, version 35, labeled SR, internal 0x1000001 0x83 (ptr 0xde0be70) [1], 0x0 (0xdfcd3a8), 0xa28 (0xe4dc2e8)

Updated Nov 22 17:34:26.975

remote adjacency to GigabitEthernet0/0/0/0

Prefix Len 32, traffic index 0, precedence n/a, priority 1

via 10.1.2.2/32, GigabitEthernet0/0/0/0, 6 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0xecbd140 0x0]

next hop 10.1.2.2/32

remote adjacency

local label 17006 labels imposed {17006}

RP/0/RP0/CPU0:R1#show cef ipv6 2001:1ab::6/128

Wed Nov 23 00:11:59.542 UTC

2001:1ab::6/128, version 32, labeled SR, internal 0x1000001 0x82 (ptr 0xe0db6ac) [1], 0x0 (0xe29a428), 0xa28 (0xe4dc268)

Updated Nov 22 17:34:26.976

remote adjacency to GigabitEthernet0/0/0/0

Prefix Len 128, traffic index 0, precedence n/a, priority 1

via fe80::5200:ff:fe03:3/128, GigabitEthernet0/0/0/0, 6 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0xd2656a0 0x0]

next hop fe80::5200:ff:fe03:3/128

remote adjacency

local label 17606 labels imposed {17606}

RP/0/RP0/CPU0:R1#

So far so good. Let’s start with a full IPv4 traceroute and see how SR interoperates with LDP.

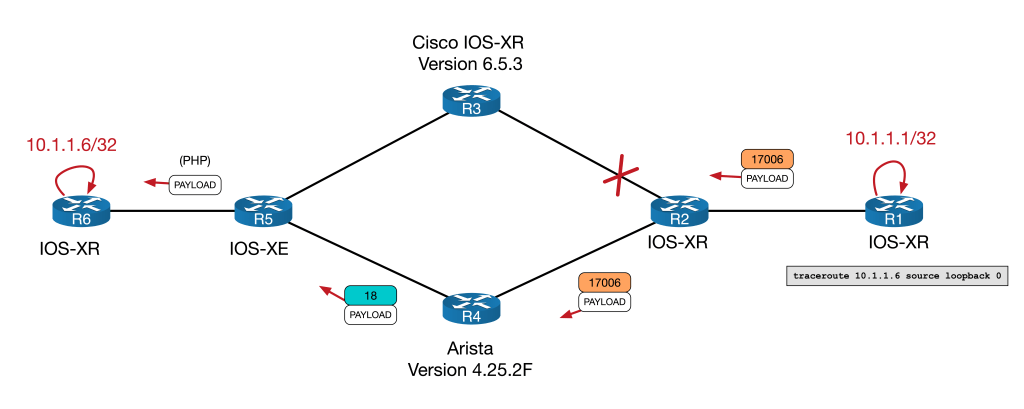

IPv4 connectivity and LDP interoperability

We’ll start by examining Cisco’s behaviour, so let’s shut down R2’s link to the Arista, R4 (Gi0/0/0/2):

RP/0/RP0/CPU0:R2(config)#int GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#commit

From here, we do a basic traceroute:

RP/0/RP0/CPU0:R1#traceroute 10.1.1.6 source lo0

Wed Nov 23 00:13:59.116 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.6

1 10.1.2.2 [MPLS: Label 17006 Exp 0] 152 msec 142 msec 135 msec

2 10.2.3.3 [MPLS: Label 17006 Exp 0] 142 msec 148 msec 146 msec

3 10.3.5.5 [MPLS: Label 18 Exp 0] 140 msec 137 msec 135 msec

4 10.5.6.6 148 msec * 126 msec

RP/0/RP0/CPU0:R1#

Here’s a visual diagram of what is happening:

Let’s look a bit closer at what is happening here. How does R3 program its LFIB? For Segment Routing, LFIB programming works like this:

- Local label: the Node SID index + the local SRGB base (in our case 6 + 17000 = 17006)

- Outbound label: the Node SID index + the SRGB base of the next hop for that prefix
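The two rules can be expressed as a minimal Python sketch. It assumes SIDs are signalled as indices (as in R6’s ISIS database, where 17006 corresponds to index 6) and uses hypothetical function names; the 20000 SRGB is an invented value, included only to show why the next hop’s SRGB matters.

```python
from typing import Optional


def local_label(local_srgb_base: int, sid_index: int) -> int:
    """Incoming label a node installs for a prefix SID."""
    return local_srgb_base + sid_index


def outbound_label(next_hop_srgb_base: Optional[int], sid_index: int) -> Optional[int]:
    """Outgoing label: the same index, offset into the next hop's SRGB.

    Returns None when the next hop advertises no SRGB (it is not SR capable),
    which is R3's situation towards R5.
    """
    if next_hop_srgb_base is None:
        return None
    return next_hop_srgb_base + sid_index


print(local_label(17000, 6))     # 17006
print(outbound_label(17000, 6))  # 17006 (uniform SRGB)
print(outbound_label(20000, 6))  # 20006 (hypothetical next hop with a different SRGB)
print(outbound_label(None, 6))   # None  (next hop is R5, which runs no SR)
```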

Now, if the next hop were SR capable and the SRGB were consistent throughout the domain (e.g. 17000 everywhere), the outbound label would be 17006 as well. But here, R5 is not SR capable. It isn’t advertising any SR TLV information in its ISIS LSPs. It does, however, have LDP sessions with all of its neighbors, including R3.

R5#sh mpls ldp neighbor | inc Peer|Gig

Peer LDP Ident: 10.1.1.4:0; Local LDP Ident 10.1.1.5:0

GigabitEthernet1, Src IP addr: 10.4.5.4

Peer LDP Ident: 10.1.1.6:0; Local LDP Ident 10.1.1.5:0

GigabitEthernet3, Src IP addr: 10.5.6.6

Peer LDP Ident: 10.1.1.3:0; Local LDP Ident 10.1.1.5:0

GigabitEthernet2, Src IP addr: 10.3.5.3

R5#

As you might predict, this is where SR to LDP interoperation comes into play. R5 will have advertised its local label for 10.1.1.6 to R3.

RP/0/RP0/CPU0:R3#sh mpls ldp ipv4 bindings 10.1.1.6/32

Wed Nov 23 00:14:23.126 UTC

10.1.1.6/32, rev 17

Local binding: label: 16300

Remote bindings: (1 peers)

Peer Label

----------------- ---------

10.1.1.5:0 18

RP/0/RP0/CPU0:R3#

So R3 will use this label instead! The basic principle is as follows:

Interworking is achieved by replacing an unknown outbound label from one protocol by a valid outgoing label from another protocol.

SR is basically “inheriting” from LDP. R3’s forwarding table looks as follows:

RP/0/RP0/CPU0:R3#sh mpls forwarding labels 17006

Wed Nov 23 00:26:02.364 UTC

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

17006 18 SR Pfx (idx 6) Gi0/0/0/2 10.3.5.5 3808

RP/0/RP0/CPU0:R3#

The local label is the SR label and the outbound label is the LDP label of 18. You can see from the traceroute that this is indeed the label used.

RP/0/RP0/CPU0:R1#traceroute 10.1.1.6 source lo0

Wed Nov 23 00:13:59.116 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.6

1 10.1.2.2 [MPLS: Label 17006 Exp 0] 152 msec 142 msec 135 msec

2 10.2.3.3 [MPLS: Label 17006 Exp 0] 142 msec 148 msec 146 msec

3 10.3.5.5 [MPLS: Label 18 Exp 0] 140 msec 137 msec 135 msec

4 10.5.6.6 148 msec * 126 msec

RP/0/RP0/CPU0:R1#

NB. I’ve skipped a couple of details here, namely how LDP to SR works in the opposite direction in conjunction with mapping server statements. These don’t directly relate to our quirk, since we’re focusing on R1 to R6 traffic, but I’d recommend reading the Segment Routing book series found on segment-routing.net to get the full details of SR/LDP interoperability.
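The interworking principle quoted above can be sketched as a simple fallback when choosing R3’s outgoing label. This is an illustrative model, assuming the router already knows the next hop’s SRGB (if any) and the LDP binding it received from that next hop (if any); it is not how IOS-XR is implemented internally, and the function name is hypothetical.

```python
from typing import Optional


def select_outgoing_label(sid_index: int,
                          next_hop_srgb_base: Optional[int],
                          ldp_binding: Optional[int]) -> Optional[int]:
    """SR-to-LDP interworking sketch: prefer SR, fall back to LDP.

    - next hop is SR capable -> next hop's SRGB base + SID index
    - otherwise              -> the label the next hop bound via LDP, if any
    - neither available      -> None (no labelled outgoing path)
    """
    if next_hop_srgb_base is not None:
        return next_hop_srgb_base + sid_index
    if ldp_binding is not None:
        return ldp_binding
    return None


# R3 -> 10.1.1.6: R5 has no SRGB but advertised LDP label 18.
print(select_outgoing_label(6, None, 18))      # 18
# R3 -> 2001:1ab::6: R5 has no SRGB and there is no IPv6 LDP binding.
print(select_outgoing_label(606, None, None))  # None
```

The second case is exactly the gap the IPv6 section below explores.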

Now that we’ve verified forwarding through the Cisco, we’ll switch to the Arista path to check that the behaviour is identical. First we’ll shut down R2’s uplink to R3 (Gi0/0/0/1) and unshut its uplink to R4 (Gi0/0/0/2), before rerunning the traceroute:

RP/0/RP0/CPU0:R2(config)#int GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#no shut

RP/0/RP0/CPU0:R2(config-if)#int GigabitEthernet 0/0/0/1

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#commit

Wed Nov 23 00:40:25.104 UTC

LC/0/0/CPU0:Nov 23 00:40:25.219 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Down

LC/0/0/CPU0:Nov 23 00:40:25.318 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Up

RP/0/RP0/CPU0:R2(config-if)#

RP/0/RP0/CPU0:R1#traceroute 10.1.1.6 source lo0

Wed Nov 23 00:41:12.857 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.6

1 10.1.2.2 [MPLS: Label 17006 Exp 0] 57 msec 54 msec 56 msec

2 * * *

3 10.4.5.5 [MPLS: Label 18 Exp 0] 60 msec 53 msec 51 msec

4 10.5.6.6 60 msec * 62 msec

RP/0/RP0/CPU0:R1#

Success! It’s using the LDP label 18 just as before, and if we run some of the Arista CLI commands we see similar inheritance behaviour to the Cisco:

R4#show mpls lfib route | begin 10.1.1.6/32

IL 17006 [1], 10.1.1.6/32

via M, 10.4.5.5, swap 18

payload autoDecide, ttlMode uniform, apply egress-acl

interface Ethernet1

<<snip>>

Here’s the diagram:

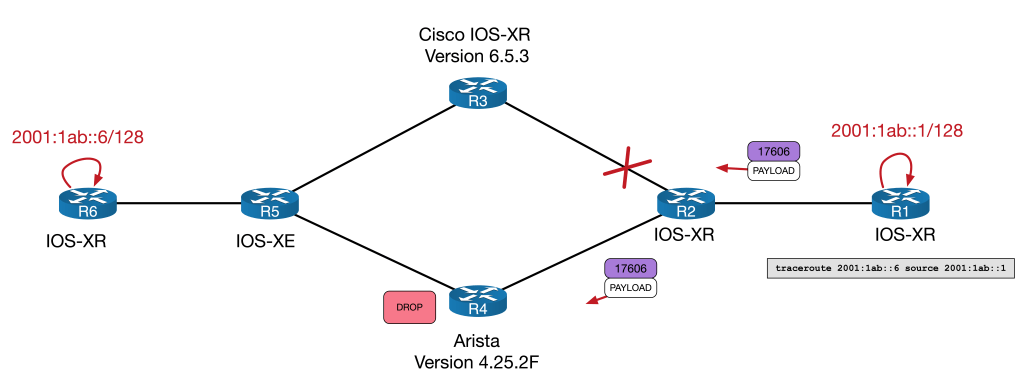

So IPv4 looks solid: if there is no SR, fall back to LDP. But what happens if we use IPv6? There is no LDP for IPv6 in this network. More importantly though… what should happen? Let’s explore what both vendors do, and then you can make up your own mind.

IPv6 Connectivity and LDP

Let’s flip back to Cisco and see what the traceroute looks like:

RP/0/RP0/CPU0:R2(config-if)#int GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#int GigabitEthernet 0/0/0/1

RP/0/RP0/CPU0:R2(config-if)#no shut

RP/0/RP0/CPU0:R2(config-if)#commit

Wed Nov 23 00:49:08.711 UTC

LC/0/0/CPU0:Nov 23 00:49:08.787 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/1, changed state to Down

LC/0/0/CPU0:Nov 23 00:49:08.837 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/1, changed state to Up

RP/0/RP0/CPU0:R2(config-if)#

RP/0/RP0/CPU0:R1#traceroute ipv6 2001:1ab::6 source lo0

Wed Nov 23 00:50:51.770 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 2001:1ab:1:2::2 [MPLS: Label 17606 Exp 0] 261 msec 48 msec 47 msec

2 2001:1ab:2:3::3 [MPLS: Label 17606 Exp 0] 52 msec 33 msec 46 msec

3 2001:1ab:3:5::5 50 msec 49 msec 48 msec

4 2001:1ab::6 105 msec 86 msec 88 msec

RP/0/RP0/CPU0:R1#

Here we see something a bit unexpected. R3 is actually popping the top label and forwarding the packet on natively:

But why is this? If you have an incoming label but no outgoing label isn’t that, by definition, a broken LSP? So why does Cisco forward the packet natively?

Well, for this I’m going to quote the Segment Routing Part 1 book (again found on segment-routing.net). Granted, this isn’t an RFC, but it does a good job of explaining the Cisco IOS-XR behaviour.

If the incoming packet has a single label … (the label has the End of Stack (EOS) bit set to indicate it is the last label), then the label is removed and the packet is forwarded as an IP packet. If the incoming packet has more than one label … then the packet is dropped and this would be the erroneous termination of the LSP that we referred to previously.

Segment Routing, Part 1 by Clarence Filsfils, Kris Michielsen, et al.

What’s happening with R3 here is MPLS2IP behaviour (since the LSP is ending and the packet is being forwarded natively). Based on the above, I believe the rule that R3 follows when deciding how to forward the incoming packet works like this:

- If there is a single SR label with the EoS bit set, forward the packet natively

- Otherwise, treat it as a broken LSP and drop the packet
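The rule I believe R3 follows can be modelled roughly as below. This is a hypothetical sketch of the observed behaviour, not Cisco’s code: the label stack is a plain list with the top label first, a single remaining label is taken to carry the EoS bit, and 24005 is an invented service label used only for illustration.

```python
from typing import List, Optional, Tuple


def cisco_style_forward(label_stack: List[int],
                        outgoing_label: Optional[int]) -> Tuple[str, List[int]]:
    """Model of the inferred IOS-XR rule (illustrative, not vendor code).

    label_stack    -- incoming labels, top of stack first
    outgoing_label -- label programmed for the top label, or None ("Unlabelled")
    Returns (action, outgoing label stack).
    """
    if outgoing_label is not None:
        # Normal MPLS2MPLS: swap the top label, keep anything underneath.
        return "swap", [outgoing_label] + label_stack[1:]
    if len(label_stack) == 1:
        # Only one label, so it carries EoS: pop it and forward as IP (MPLS2IP).
        return "pop-and-forward-ip", []
    # More labels underneath (e.g. a VPN/service label): treat as a broken LSP.
    return "drop", []


print(cisco_style_forward([17606], None))         # ('pop-and-forward-ip', [])
print(cisco_style_forward([17606, 24005], None))  # ('drop', [])
print(cisco_style_forward([17006], 18))           # ('swap', [18])
```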

Both CEF and the LFIB reflect this behaviour with Unlabelled as the outgoing label:

RP/0/RP0/CPU0:R3#show mpls forwarding labels 17606

Wed Nov 23 00:55:21.324 UTC

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

17606 Unlabelled SR Pfx (idx 606) Gi0/0/0/2 fe80::5200:ff:fe05:1 \

778986

RP/0/RP0/CPU0:R3#sh cef mpls local-label 17606 EOS

Wed Nov 23 00:55:27.537 UTC

Label/EOS 17606/1, version 27, labeled SR, internal 0x1000001 0x82 (ptr 0xd3575b0) [1], 0x0 (0xe3a44a8), 0xa20 (0xe4dc3a8)

Updated Jan 10 13:37:10.343

remote adjacency to GigabitEthernet0/0/0/2

Prefix Len 21, traffic index 0, precedence n/a, priority 1

via fe80::5200:ff:fe05:1/128, GigabitEthernet0/0/0/2, 8 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0xd576738 0x0]

next hop fe80::5200:ff:fe05:1/128

remote adjacency

local label 17606 labels imposed {None}

But why forward natively only when there is a single label?

I believe Cisco is assuming that if there is only one label, that label is likely to be a transport label. This would imply that the underlying IPv6 destination is an endpoint loopback address in the IGP, which any subsequent P router would most likely know about. This allows traffic to be forwarded in brownfield migration scenarios similar to our lab.

If there is more than one label, then it would seem prudent to drop the packet, as any underlying labels are likely to be VPN or service labels that the next P router would not understand.

I can’t be sure that this is the reasoning Cisco were going for, but it seems reasonable to me.

Now that we know how Cisco does it, let’s look at how Arista tackles the same scenario. Just like with IPv4, we’ll flip the path and retry the traceroute:

RP/0/RP0/CPU0:R2(config)#interface GigabitEthernet 0/0/0/1

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#interface GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#no shut

RP/0/RP0/CPU0:R2(config-if)#commit

Wed Nov 23 00:57:01.906 UTC

LC/0/0/CPU0:Wed Nov 23 00:57:02.486 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Down

LC/0/0/CPU0:Wed Nov 23 00:57:02.533 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Up

RP/0/RP0/CPU0:R2(config-if)#

RP/0/RP0/CPU0:R1#traceroute ipv6 2001:1ab::6 source lo0

Wed Nov 23 01:00:12.976 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 * * *

2 * * *

3 * * *

4 * * *

^C

RP/0/RP0/CPU0:R1#

No network engineer ever likes to see a broken traceroute, but clearly something isn’t getting through.

Looking at the LFIB on the Arista starts to give us an idea:

R4#show mpls lfib route 17606

MPLS forwarding table (Label [metric] Vias) - 0 routes

MPLS next-hop resolution allow default route: False

Via Type Codes:

M - MPLS via, P - Pseudowire via,

I - IP lookup via, V - VLAN via,

VA - EVPN VLAN aware via, ES - EVPN ethernet segment via,

VF - EVPN VLAN flood via, AF - EVPN VLAN aware flood via,

NG - Nexthop group via

Source Codes:

G - gRIBI, S - Static MPLS route,

B2 - BGP L2 EVPN, B3 - BGP L3 VPN,

R - RSVP, LP - LDP pseudowire,

L - LDP, M - MLDP,

IP - IS-IS SR prefix segment, IA - IS-IS SR adjacency segment,

IL - IS-IS SR segment to LDP, LI - LDP to IS-IS SR segment,

BL - BGP LU, ST - SR TE policy,

DE - Debug LFIB

R4#

Unlike on the Cisco, there is no entry at all for 17606 in the Arista LFIB.

Interestingly though, tracing does work directly from R4 to R6:

R4#traceroute ipv6 2001:1ab::6 source 2001:1ab::4

traceroute to 2001:1ab::6 (2001:1ab::6), 30 hops max, 80 byte packets

1 2001:1ab:4:5::5 (2001:1ab:4:5::5) 5.145 ms 9.544 ms 10.500 ms

2 2001:1ab::6 (2001:1ab::6) 95.139 ms 94.834 ms 96.574 ms

R4#

This traceroute is a case of IP2IP forwarding. R4, being aware that it has no label towards the next hop, forwards the packet natively. This is similar to Cisco, but Cisco has the aforementioned MPLS2IP rule that bridges the two parts.

To begin troubleshooting the Arista, let’s check the basics. We already know that there is no LFIB entry for 17606; we’d expect to see it at the bottom of this table:

R4#show mpls lfib route detail | inc 17

IP 17001 [1], 10.1.1.1/32

via M, 10.2.4.2, swap 17001

IP 17002 [1], 10.1.1.2/32

IL 17003 [1], 10.1.1.3/32

via M, 10.4.5.5, swap 17

IP 17005 [1], 10.1.1.5/32

IL 17006 [1], 10.1.1.6/32

IP 17601 [1], 2001:1ab::1/128

via M, fe80::5200:ff:fe03:5, swap 17601

IP 17602 [1], 2001:1ab::2/128

via M, 10.2.4.2, swap 17001

via M, 10.4.5.5, swap 17

R4#

Perhaps R4 is not getting the correct Segment Routing information. We know from our initial config check that R6 is configured correctly. When SR is enabled on a device (no matter the vendor), an SR-Capability sub-TLV is added under the Router Capability TLV. This signals that the router is SR capable, along with various other SR parameters such as its SRGB.

We can see that R4 is aware that R6 is SR enabled and gets all of the correct Node-SID information:

R4#sh isis database R6.00-00 detail

IS-IS Instance: LAB VRF: default

IS-IS Level 2 Link State Database

LSPID Seq Num Cksum Life IS Flags

R6.00-00 3184 38204 1104 L2 <>

Remaining lifetime received: 1198 s Modified to: 1200 s

<snip>

IS Neighbor (MT-IPv6): R5.00 Metric: 10

Adj-sid: 16312 flags: [ L V F ] weight: 0x0

Reachability : 10.1.1.6/32 Metric: 0 Type: 1 Up

SR Prefix-SID: 6 Flags: [ N ] Algorithm: 0

Reachability (MT-IPv6): 2001:1ab::6/128 Metric: 0 Type: 1 Up

SR Prefix-SID: 606 Flags: [ N ] Algorithm: 0

Router Capabilities: Router Id: 10.1.1.6 Flags: [ ]

SR Local Block:

SRLB Base: 15000 Range: 1000

SR Capability: Flags: [ I V ]

SRGB Base: 17000 Range: 7000

Algorithm: 0

Algorithm: 1

Segment Binding: Flags: [ ] Weight: 0 Range: 1 Pfx 10.1.1.5/32

SR Prefix-SID: 5 Flags: [ ] Algorithm: 0

R4#show isis segment-routing prefix-segments vrf all

System ID: 1111.1111.0004 Instance: 'LAB'

SR supported Data-plane: MPLS SR Router ID: 10.1.1.4

Node: 10 Proxy-Node: 1 Prefix: 0 Total Segments: 11

Flag Descriptions: R: Re-advertised, N: Node Segment, P: no-PHP

E: Explicit-NULL, V: Value, L: Local

Segment status codes: * - Self originated Prefix, L1 - level 1, L2 - level 2, ! - SR-unreachable,

# - Some IS-IS next-hops are SR-unreachable

Prefix SID Type Flags System ID Level Protection

------------------------- ----- ---------- ----------------------- --------------- ----- ---

<snip>

2001:1ab::1/128 601 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0001 L2 unprotected

2001:1ab::2/128 602 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0002 L2 unprotected

2001:1ab::3/128 603 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0003 L2 unprotected

* 2001:1ab::4/128 604 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0004 L2 unprotected

2001:1ab::6/128 606 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0006 L2 unprotected

R4#

So far so good. But why is there no LFIB entry? To understand what is happening here, we need to understand how Arista programs its LFIB.

When Arista forwards using Segment Routing, the entry is first created in the SR bindings table, and only then does it enter the LFIB. We can see that 2001:1ab::6 makes it into the SR bindings table:

R4#show mpls segment-routing bindings ipv6

2001:1ab::1/128

Local binding: Label: 17601

Remote binding: Peer ID: 1111.1111.0002, Label: 17601

2001:1ab::2/128

Local binding: Label: 17602

Remote binding: Peer ID: 1111.1111.0002, Label: imp-null

2001:1ab::3/128

Local binding: Label: 17603

Remote binding: Peer ID: 1111.1111.0002, Label: 17603

2001:1ab::4/128

Local binding: Label: imp-null

Remote binding: Peer ID: 1111.1111.0002, Label: 17604

2001:1ab::6/128

Local binding: Label: 17606

Remote binding: Peer ID: 1111.1111.0002, Label: 17606

R4#

But why does it not then enter the LFIB? I believe what is happening here is that R4 fails to program the LFIB based on the rules outlined above, namely:

- Local label: the Node SID index + the local SRGB base (in our case 606 + 17000 = 17606)

- Outbound label: the Node SID index + the SRGB base of the next hop for that prefix

The outbound label can’t be determined, since the IGP next hop to 2001:1ab::6 is R5, a device that isn’t running SR. With no LDP to inherit from (since there is no LDP for IPv6) and without a special rule to forward natively (like Cisco has), the LFIB entry is never programmed and the packet is dropped!
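Contrast this with a sketch of what appears to happen on R4. It assumes, as argued above, that an LFIB entry is only installed when an outgoing label can be resolved and that an LFIB miss leads to a drop. The function names are hypothetical, and this models the observed behaviour rather than Arista’s software internals.

```python
from typing import Dict, Optional


def program_lfib(bindings: Dict[int, Optional[int]]) -> Dict[int, int]:
    """Install only entries whose outgoing label could be resolved.

    bindings maps local label -> outgoing label (None if unresolvable).
    """
    return {local: out for local, out in bindings.items() if out is not None}


def arista_style_forward(lfib: Dict[int, int], top_label: int) -> str:
    """With no special MPLS2IP rule, an LFIB miss means the packet is dropped."""
    if top_label in lfib:
        return f"swap {lfib[top_label]}"
    return "drop (label miss)"


# R4: 17006 resolves via R5's LDP label 18; 17606 has no outgoing label.
lfib = program_lfib({17006: 18, 17606: None})
print(arista_style_forward(lfib, 17006))  # swap 18
print(arista_style_forward(lfib, 17606))  # drop (label miss)
```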

Note that in the above SR bindings table there are remote bindings, but these are all from R2 (the Peer ID 1111.1111.0002 is the ISIS system ID of R2), which is not the IGP next hop.

NB. If you are doing packet traces on a physical appliance, the “show cpu counters queue summary” command will reveal the “CoppSystemMplsLabelMiss” packet counter incrementing as traffic is dropped during the traceroute. I’ve omitted it here as the command won’t work in a virtual lab environment.

So who is correct?

The obvious question at this point becomes: who is correct? Yes, the traffic gets through the Cisco, but isn’t it arguably violating the principle of a broken LSP? After all, if LDP goes down between two devices in an SP core, don’t we want to avoid using that link? Isn’t that the idea behind features like LDP-IGP Sync? What if Cisco forwards an MPLS2IP packet natively and the next hop sends the packet somewhere unintended? I imagine situations like this would be rare, but maybe Arista are right to play it safe and drop it?

I went on an RFC hunt, hoping to find an authoritative source that would determine what behaviour ought to be followed.

Unfortunately, I couldn’t find a direct reference in any RFC. The closest reference I could get was a brief mention in RFC 8661 in the MPLS2MPLS, MPLS2IP, and IP2MPLS Coexistence section.

The same applies for the MPLS2IP forwarding entries. MPLS2IP is the forwarding behavior where a router receives a labeled IPv4/IPv6 packet with one label only, pops the label, and switches the packet out as IPv4/IPv6.

RFC 8661 Section 2.1

This does little more than acknowledge the existence of MPLS2IP forwarding. It certainly doesn’t tell us the correct behaviour. If anyone knows of an authority to resolve this, please feel free to let me know! Unfortunately, at this stage each vendor appears free to program whatever forwarding behaviour they like.

To that end, I put it to you: what do you think is the best behaviour in scenarios like this?

My personal preference is the Cisco option, because it allows for brownfield migrations like the one we encountered. Without an IPv6 label distribution protocol, and without reverting to 6PE, I believe this behaviour is warranted. The most likely worst-case scenario is that the next-hop router simply discards the packet due to not having a route; however, I concede there might be scenarios where this could be problematic.

Until there is consistency between vendors we’ll need ways to work around scenarios like this. Let’s take a look at a few.

Solutions

Sadly, most of the solutions to this are suitably dull. They involve removing the IPv6 next hop, the label, or both…

Remove the IPv6 Node SID

This is perhaps the simplest option. By removing the IPv6 Node SID from R6, R1 would have no label for R6’s IPv6 loopback and, as a result, would forward the traffic natively. We can demonstrate this as follows:

! The link via the Cisco device R3 is admin down to make sure traffic travels via the Arista

RP/0/RP0/CPU0:R6(config)#router isis LAB

RP/0/RP0/CPU0:R6(config-isis)# interface Loopback0

RP/0/RP0/CPU0:R6(config-isis-if)# address-family ipv6 unicast

RP/0/RP0/CPU0:R6(config-isis-if-af)#no prefix-sid absolute 17606

RP/0/RP0/CPU0:R6(config-isis-if-af)#commit

Wed Nov 23 01:10:43.103 UTC

RP/0/RP0/CPU0:Wed Nov 23 01:10:45.432 UTC: config[67901]: %MGBL-CONFIG-6-DB_COMMIT : Configuration committed by user 'user1'. Use 'show configuration commit changes 1000000015' to view the changes.

RP/0/RP0/CPU0:R6(config-isis-if-af)#RP/0/RP0/CPU0:Wed Nov 23 01:10:47.089 UTC: bgp[1060]: %ROUTING-BGP-5-ADJCHANGE_DETAIL : neighbor 2001:1ab::1 Up (VRF: default; AFI/SAFI: 2/1) (AS: 100)

RP/0/RP0/CPU0:R6(config-isis-if-af)#

! note the native forwarding

RP/0/RP0/CPU0:R1#traceroute 2001:1ab::6 source 2001:1ab::1

Wed Nov 23 01:11:02.802 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 2001:1ab:1:2::2 79 msec 4 msec 3 msec

2 2001:1ab:2:4::4 6 msec 5 msec 5 msec

3 2001:1ab:4:5::5 8 msec 8 msec 7 msec

4 2001:1ab::6 12 msec 11 msec 9 msec

RP/0/RP0/CPU0:R1#

Weight out the Arista link

I’ll only mention this in passing since, whilst it does allow the Arista to remain live in the network for IPv4 traffic, weighting the link out is more akin to avoiding the problem than solving it. Here’s an example of changing the ISIS metric:

R4(config)#int eth2

R4(config-if-Et2)#isis ipv6 metric 9999

R4(config-if-Et2)#int eth4

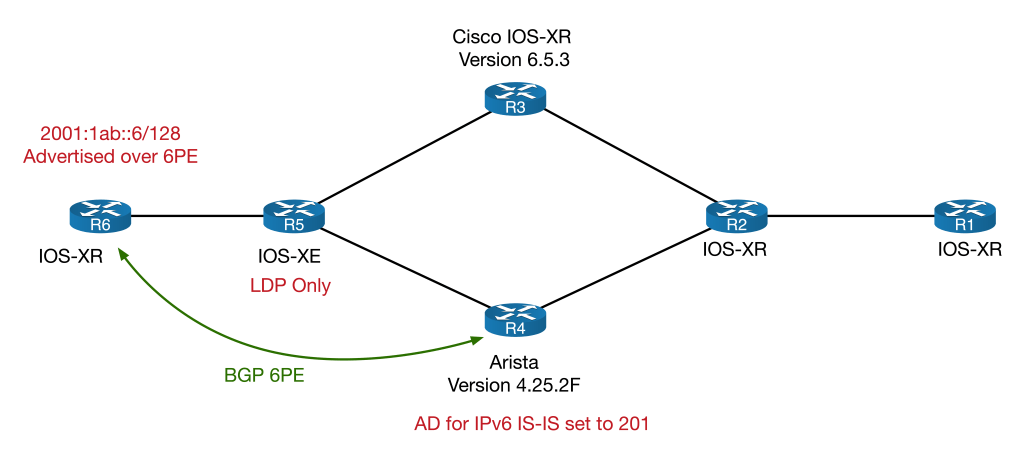

R4(config-if-Et4)#isis ipv6 metric 9999Reverting to 6PE

You might ask why this lab chooses to use native IPv6 BGP in the first place, rather than 6PE. Other than wanting to future-proof the network and utilise all the benefits that come with SR, the real-world scenario on which this blog is loosely based involved a 6PE bug. Basically, a 6PE router was allocating one label per IPv6 prefix rather than a null label. This resulted in label exhaustion issues on the device in question. The details are beyond the scope of this blog, but this provides a little context. Regardless, if we use 6PE, the next hops are now IPv4 loopbacks. This allows the normal SR/LDP interoperability to take place as outlined above. Here is the basic config and verification:

RP/0/RP0/CPU0:R1(config)#router bgp 100

RP/0/RP0/CPU0:R1(config-bgp)#address-family ipv6 unicast

RP/0/RP0/CPU0:R1(config-bgp-af)#allocate-label all

RP/0/RP0/CPU0:R1(config-bgp-af)#exit

RP/0/RP0/CPU0:R1(config-bgp)#neighbor 10.1.1.6

RP/0/RP0/CPU0:R1(config-bgp-nbr)#address-family ipv6 labeled-unicast

! similar commands done on R6 not shown here

RP/0/RP0/CPU0:R1#sh bgp ipv6 labeled-unicast

Wed Nov 23 01:17:39.800 UTC

BGP router identifier 10.1.1.1, local AS number 100

BGP generic scan interval 60 secs

Non-stop routing is enabled

BGP table state: Active

Table ID: 0xe0800000 RD version: 4

BGP main routing table version 4

BGP NSR Initial initsync version 2 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

BGP scan interval 60 secs

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 2001:cafe:1::/64 2001:db8:1::1 0 0 65489 i

*>i2001:cafe:2::/64 10.1.1.6 0 100 0 65489 i

Processed 2 prefixes, 2 paths

RP/0/RP0/CPU0:R1#

CE1#traceroute 2001:cafe:2::1 source 2001:cafe:1::1

Type escape sequence to abort.

Tracing the route to 2001:CAFE:2::1

1 2001:DB8:1:: 4 msec 3 msec 1 msec

2 * * *

3 * * *

4 ::FFFF:10.4.5.5 [MPLS: Labels 20/16302 Exp 0] 15 msec 14 msec 26 msec

5 * * *

6 2001:DB8:2::1 15 msec 14 msec 10 msec

CE1#

Implement Segment Routing using IPv6 Headers

I’ll only mention this in passing, as I’ve not seen this implemented in the wild and am not sure my lab environment would support it. Suffice it to say that if this could be implemented, it would remove the need for LDP labels entirely.

6PE from P router to PE

I toyed with this idea out of curiosity more than anything else. I’d not recommend this for a real world deployment, but the general idea is as follows:

- Run 6PE between the problematic P router (in our case R4) and the destination PE router (R6)

- The PE router would advertise its IPv6 loopback over the 6PE session.

- The P router would filter its 6PE session to only accept PE loopback addresses

- The P router would set the Administrative Distance of the IGP to be 201, so it would prefer the iBGP AD of 200 to reach the end point.

The idea is that as soon as the traffic reaches the P router, the next hop to the IPv6 endpoint is learned not via the IGP but via the 6PE session. The result is that the incoming IPv6 SR label is replaced with two labels: the bottom label is the 6PE label for the IPv6 endpoint address, and the top label is the transport label for the IPv4 address of the 6PE peer (where SR to LDP interoperability can take over). This might be scalable if the P router were running 6PE to all the PEs via a route reflector, with an inbound route-map only allowing in their next-hop loopbacks.
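To illustrate the idea, here is a rough Python model of the route choice on the P router and the resulting label rewrite. The `Route` type and function names are hypothetical; the distances (IGP raised to 201, iBGP at 200) come from the list above, and the labels 18 and 16300 are the values seen in the R3 test further on.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Route:
    source: str        # e.g. "isis" or "ibgp-6pe"
    distance: int      # administrative distance
    labels: List[int]  # labels to impose, top first (empty = no usable label)


def best_route(routes: List[Route]) -> Route:
    """Lowest administrative distance wins."""
    return min(routes, key=lambda r: r.distance)


def rewrite(incoming: List[int], route: Route) -> List[int]:
    """Replace the incoming top label with the chosen route's label stack."""
    return route.labels + incoming[1:]


igp = Route("isis", 201, [])                  # IGP AD raised to 201; no IPv6 label via R5
six_pe = Route("ibgp-6pe", 200, [18, 16300])  # transport label for 10.1.1.6, then 6PE label
chosen = best_route([igp, six_pe])
print(chosen.source, rewrite([17606], chosen))  # ibgp-6pe [18, 16300]
```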

To put this in diagram form, it would look like this:

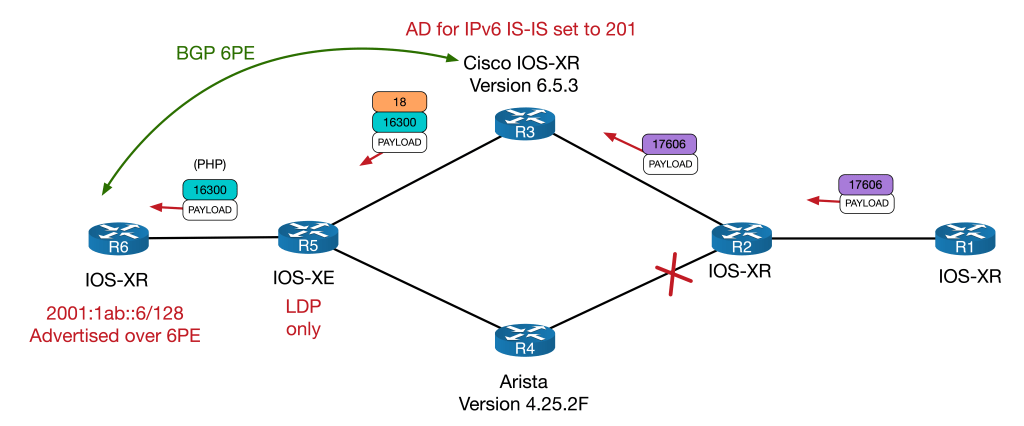

Unfortunately my virtual Arista didn’t support 6PE. I did test the principle on my Cisco P device (R3) and it seemed to work. Here is the basic config and verification:

! R3's BGP config

router bgp 100

bgp router-id 10.1.1.3

bgp log neighbor changes detail

address-family ipv6 unicast

allocate-label all

!

neighbor 10.1.1.6

remote-as 100

update-source Loopback0

address-family ipv6 labeled-unicast

route-policy ONLY-PEs in

!

!

!

route-policy ONLY-PEs

if destination in (2001:1ab::6/128) then

pass

else

drop

endif

end-policy

! R6's BGP config

router bgp 100

bgp router-id 10.1.1.6

address-family ipv6 unicast

network 2001:1ab::6/128

allocate-label all

!

!

neighbor 10.1.1.4

remote-as 100

update-source Loopback0

address-family ipv6 labeled-unicast

!

!

R3 now sees the next hop via the 6PE BGP session, with a 6PE label:

RP/0/RP0/CPU0:R3#sh route ipv6 2001:1ab::6

Wed Nov 23 01:21:43.710 UTC

Routing entry for 2001:1ab::6/128

Known via "bgp 100", distance 200, metric 0, type internal

Installed Nov 23 01:19:01.151 for 00:02:23

Routing Descriptor Blocks

::ffff:10.1.1.6, from ::ffff:10.1.1.6

Nexthop in Vrf: "default", Table: "default", IPv4 Unicast, Table Id: 0xe0000000

Route metric is 0

No advertising protos.

RP/0/RP0/CPU0:R3#sh bgp ipv6 labeled-unicast 2001:1ab::6

Wed Nov 23 01:21:45.081 UTC

BGP routing table entry for 2001:1ab::6/128

Versions:

Process bRIB/RIB SendTblVer

Speaker 3 3

Last Modified: Nov 23 01:18:58.980 for 00:01:54

Paths: (1 available, best #1)

Not advertised to any peer

Path #1: Received by speaker 0

Not advertised to any peer

Local

10.1.1.6 (metric 20) from 10.1.1.6 (10.1.1.6)

Received Label 16300

Origin IGP, metric 0, localpref 100, valid, internal, best, group-best, labeled-unicast

Received Path ID 0, Local Path ID 1, version 3

RP/0/RP0/CPU0:R3#

Traceroute confirms the 6PE path:

RP/0/RP0/CPU0:R1#traceroute 2001:1ab::6 source 2001:1ab::1

Wed Nov 23 01:23:24.423 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 2001:1ab:1:2::2 [MPLS: Label 17606 Exp 0] 38 msec 6 msec 5 msec

2 2001:1ab:2:3::3 [MPLS: Label 17606 Exp 0] 6 msec 5 msec 5 msec

3 ::ffff:10.3.5.5 [MPLS: Labels 18/16300 Exp 0] 5 msec 5 msec 6 msec

4 2001:1ab::6 7 msec 6 msec 6 msec

RP/0/RP0/CPU0:R1#

18 is R5’s LDP label for 10.1.1.6 and 16300 is the 6PE label for 2001:1ab::6. Whilst this technically works, it also means that R3 would always use 6PE to reach any IPv6 endpoint it receives over the 6LU session. It does, however, allow the IPv6 Node SID and the native IPv6 session to remain in place.
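The 6PE next hop appears in R3’s RIB as the IPv4-mapped address ::ffff:10.1.1.6. As a hedged sketch of how the two labels relate, the following Python uses the standard `ipaddress` module to recover the IPv4 loopback from that next hop and then stacks the transport label for it above the 6PE label. The `six_pe_stack` helper and its lookup table are hypothetical.

```python
import ipaddress
from typing import Dict, List


def six_pe_stack(next_hop: str, ipv4_transport: Dict[str, int],
                 six_pe_label: int) -> List[int]:
    """Build the label stack for a 6PE route, top label first.

    next_hop       -- the BGP next hop as shown in the IPv6 RIB (IPv4-mapped)
    ipv4_transport -- outgoing transport label per IPv4 loopback (SR or LDP)
    six_pe_label   -- label received with the IPv6 labeled-unicast route
    """
    v4 = ipaddress.IPv6Address(next_hop).ipv4_mapped
    if v4 is None:
        raise ValueError(f"{next_hop} is not an IPv4-mapped address")
    return [ipv4_transport[str(v4)], six_pe_label]


print(six_pe_stack("::ffff:10.1.1.6", {"10.1.1.6": 18}, 16300))  # [18, 16300]
```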

Again, this was a thought exercise more than anything else – I wouldn’t recommend this for a live deployment without a lot more testing.

Conclusion

Here, we’ve seen an unexpected scenario whereby Cisco forwards single-labelled SR packets natively, but Arista treats them as a broken LSP, as they arguably are. This causes problems for MPLS2IP forwarding of IPv6 packets in a brownfield migration. Ultimately, it comes down to the way each vendor chooses to implement its LFIB. At the time of writing, the only real solutions are to remove the IPv6 next hop, the label, or both; however, it will be interesting to see, moving forward, whether SRv6 or perhaps even some inter-vendor consensus could resolve this interesting quirk. Thanks so much for reading. Thoughts and comments are welcome as always.
