netquirks

Exploring the quirks of Network Engineering

The Label Switched Path Not Taken

With Segment Routing increasingly being introduced as the label distribution method used by Service Providers, there will inevitably be clashes with the tried and true LDP protocol. Indeed, interoperation between SR and LDP is one of the most important features to consider when introducing SR into a network. But what happens if there is no SR-LDP interoperation to be had? This is definitely the case for IPv6, since LDP for IPv6 is, more often than not, non-existent. This is exactly what this quirk explores. More specifically, it looks at how two different vendors, namely Cisco and Arista, tackle an LSP problem involving native IPv6 MPLS2IP forwarding.

I’ll begin by showing the topology and then give an example of how basic IPv4 SR to LDP interoperation works. We’ll then look at a similar scenario using IPv6 and explore how each vendor behaves. There is no “right answer” to this situation, as neither vendor violates any RFC (at least none that I can find), but it is an interesting exploration of how each vendor approaches the same problem.

Setup

I’ll start with a disclaimer: this quirk applies to the following software versions in a lab environment:

- Cisco IOS-XR 6.5.3

- Arista 4.25.2F

There is nothing I have seen in either the release notes or real-world deployments that would make me think the behaviour described here would be any different on the latest releases, but it’s worth keeping in mind. With that said, let’s look at the setup…

The topology we will look at is as follows:

An EVE-NG lab and the base configs can be downloaded here:

The EVE lab has all interfaces unshut. The goal here is for the CE subnets to reach each other. To accomplish this R1 and R6 will run BGP sessions between their loopbacks. In this state, IPv6 forwarding will be broken – but we’ll explore that as we go!

I’ve made the network fairly straightforward to allow us to focus on the quirk. Every device except R5 runs SR with point-to-point L2 ISIS as the underlying IGP. The SRGB base is 17000 on all devices. The IPv4 Node SID index for each router is its router number, and the IPv6 Node SID index is the router number plus 600. R5 is an LDP-only node and as such needs a mapping server to advertise its Node SID throughout the network; R6 fulfils this role.
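As a quick cross-check of the numbering plan, here is a minimal Python sketch (not part of the lab) that turns each router number into its SID index and MPLS label. It assumes the rule described above (IPv4 index = router number, IPv6 index = router number + 600) and the 17000 to 23999 global block configured on R6; the helper names are hypothetical.

```python
# Hypothetical helpers modelling this lab's SID numbering plan.
SRGB_BASE = 17000
SRGB_END = 23999   # "global-block 17000 23999" as configured on R6
IPV6_OFFSET = 600  # lab convention: IPv6 index = router number + 600


def node_sid_index(router: int, ipv6: bool = False) -> int:
    """Return the prefix-SID index a router uses for its loopback."""
    return router + IPV6_OFFSET if ipv6 else router


def sid_to_label(index: int, base: int = SRGB_BASE, end: int = SRGB_END) -> int:
    """Map a SID index into the SRGB; the resulting label must stay inside the block."""
    label = base + index
    if not base <= label <= end:
        raise ValueError(f"SID index {index} does not fit in the SRGB")
    return label


# R5 runs no SR: its IPv4 index (5) is advertised by R6's mapping server,
# and it has no IPv6 SID at all.
ipv4_labels = {f"R{r}": sid_to_label(node_sid_index(r)) for r in range(1, 7)}
ipv6_labels = {f"R{r}": sid_to_label(node_sid_index(r, ipv6=True))
               for r in range(1, 7) if r != 5}

if __name__ == "__main__":
    print(ipv4_labels)
    print(ipv6_labels)
```

For R6 this gives 17006 and 17606, matching the `prefix-sid absolute` values in R6’s config.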

To explore the quirk, we will look at forwarding from R1 to R6, loopback-to-loopback. Notice that R3 (a Cisco) and R4 (an Arista) sit on the SR/LDP boundary. IPv4 will be looked at first, to help explain the interoperation between SR and LDP. Once that is done, I’ll demonstrate how each vendor handles IPv6 forwarding differently, which results in forwarding problems.

For now we won’t look at the PE-CE BGP sessions since, without working iBGP sessions, our core control plane is broken.

To get the lay of the land let’s check the config on R6 (our destination) and make sure R1’s LFIB is configured correctly.

RP/0/RP0/CPU0:R6#sh run router isis

Wed Nov 23 00:04:51.370 UTC

router isis LAB

is-type level-2-only

net 49.0100.1111.1111.0006.00

log adjacency changes

address-family ipv4 unicast

metric-style wide

advertise passive-only

segment-routing mpls sr-prefer

segment-routing prefix-sid-map advertise-local

!

address-family ipv6 unicast

metric-style wide

advertise passive-only

segment-routing mpls sr-prefer

!

interface Loopback0

passive

address-family ipv4 unicast

prefix-sid absolute 17006

!

address-family ipv6 unicast

prefix-sid absolute 17606

!

!

interface GigabitEthernet0/0/0/3

point-to-point

address-family ipv4 unicast

!

address-family ipv6 unicast

!

!

!

RP/0/RP0/CPU0:R6#sh run segment-routing

Wed Nov 23 00:05:13.681 UTC

segment-routing

global-block 17000 23999

mapping-server

prefix-sid-map

address-family ipv4

10.1.1.5/32 5 range 1

!

!

!

!

RP/0/RP0/CPU0:R6#

The same SRGB is configured on all devices. We can see that R6 has SID index 6 for its IPv4 loopback and index 606 for its IPv6 loopback.

With these two pieces of information, we’d expect the CEF table on R1 to use 17006 and 17606 to forward to R6’s IPv4 and IPv6 loopbacks respectively:

RP/0/RP0/CPU0:R1#show cef ipv4 10.1.1.6/32

Wed Nov 23 00:11:57.523 UTC

10.1.1.6/32, version 35, labeled SR, internal 0x1000001 0x83 (ptr 0xde0be70) [1], 0x0 (0xdfcd3a8), 0xa28 (0xe4dc2e8)

Updated Nov 22 17:34:26.975

remote adjacency to GigabitEthernet0/0/0/0

Prefix Len 32, traffic index 0, precedence n/a, priority 1

via 10.1.2.2/32, GigabitEthernet0/0/0/0, 6 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0xecbd140 0x0]

next hop 10.1.2.2/32

remote adjacency

local label 17006 labels imposed {17006}

RP/0/RP0/CPU0:R1#show cef ipv6 2001:1ab::6/128

Wed Nov 23 00:11:59.542 UTC

2001:1ab::6/128, version 32, labeled SR, internal 0x1000001 0x82 (ptr 0xe0db6ac) [1], 0x0 (0xe29a428), 0xa28 (0xe4dc268)

Updated Nov 22 17:34:26.976

remote adjacency to GigabitEthernet0/0/0/0

Prefix Len 128, traffic index 0, precedence n/a, priority 1

via fe80::5200:ff:fe03:3/128, GigabitEthernet0/0/0/0, 6 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0xd2656a0 0x0]

next hop fe80::5200:ff:fe03:3/128

remote adjacency

local label 17606 labels imposed {17606}

RP/0/RP0/CPU0:R1#

So far so good. Let’s start with a full IPv4 traceroute and see how SR interoperates with LDP.

IPv4 connectivity and LDP interoperability

We’ll start by examining Cisco’s behaviour, so let’s shut down the R2 to R4 Arista link (Gi0/0/0/2)…

RP/0/RP0/CPU0:R2(config)#int GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#commit

From here, we do a basic traceroute:

RP/0/RP0/CPU0:R1#traceroute 10.1.1.6 source lo0

Wed Nov 23 00:13:59.116 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.6

1 10.1.2.2 [MPLS: Label 17006 Exp 0] 152 msec 142 msec 135 msec

2 10.2.3.3 [MPLS: Label 17006 Exp 0] 142 msec 148 msec 146 msec

3 10.3.5.5 [MPLS: Label 18 Exp 0] 140 msec 137 msec 135 msec

4 10.5.6.6 148 msec * 126 msec

RP/0/RP0/CPU0:R1#

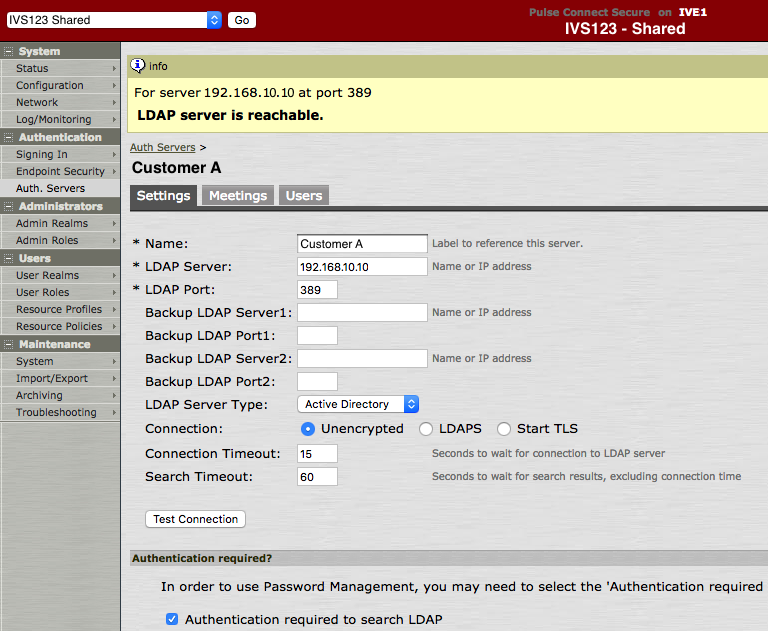

Here’s a visual diagram of what is happening:

Let’s look a bit closer at what is happening here. How does R3 program its LFIB? For segment routing the LFIB programming works like this:

- Local label: The Node SID + the SRGB Base (in our case 6 + 17000 = 17006)

- Outbound label: The Node SID + the SRGB Base of the next-hop for that prefix
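To make the two rules concrete, here is a minimal Python sketch of that per-prefix calculation. It is a simplified model, not vendor code: `next_hop_srgb_base` is a hypothetical parameter that is `None` when the IGP next hop advertises no SR capability, which is exactly the position R3 is in with R5.

```python
from typing import Optional, Tuple


def program_sr_entry(sid_index: int, local_srgb_base: int,
                     next_hop_srgb_base: Optional[int]) -> Tuple[int, Optional[int]]:
    """Return (local_label, outbound_label) for one prefix SID.

    The local label comes from our own SRGB; the outbound label comes from
    the SRGB advertised by the IGP next hop. A non-SR next hop yields None.
    """
    local_label = local_srgb_base + sid_index
    if next_hop_srgb_base is None:
        return local_label, None
    return local_label, next_hop_srgb_base + sid_index


# R2 -> R3 for R6's IPv4 loopback: same SRGB everywhere, so 17006 in and out.
print(program_sr_entry(6, 17000, 17000))  # (17006, 17006)
# R3 -> R5: R5 is not SR capable, so SR alone gives no outbound label.
print(program_sr_entry(6, 17000, None))   # (17006, None)
```

If a neighbor used a different SRGB (say a hypothetical base of 18000), the outbound label would be 18006, which is why the outbound label is always derived from the next hop’s SRGB rather than our own.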

Now, if the next hop were SR capable and the SRGB were consistent throughout the domain (e.g. 17000 everywhere), the outbound label would be 17006 as well. But here, R5 is not SR capable. It isn’t advertising any SR TLV information in its ISIS LSPs. It does, however, have LDP sessions with all of its neighbors, including R3.

R5#sh mpls ldp neighbor | inc Peer|Gig

Peer LDP Ident: 10.1.1.4:0; Local LDP Ident 10.1.1.5:0

GigabitEthernet1, Src IP addr: 10.4.5.4

Peer LDP Ident: 10.1.1.6:0; Local LDP Ident 10.1.1.5:0

GigabitEthernet3, Src IP addr: 10.5.6.6

Peer LDP Ident: 10.1.1.3:0; Local LDP Ident 10.1.1.5:0

GigabitEthernet2, Src IP addr: 10.3.5.3

R5#

As you might be able to predict, this is where SR to LDP interoperation comes into play. R5 will have advertised its local label for 10.1.1.6 to R3.

RP/0/RP0/CPU0:R3#sh mpls ldp ipv4 bindings 10.1.1.6/32

Wed Nov 23 00:14:23.126 UTC

10.1.1.6/32, rev 17

Local binding: label: 16300

Remote bindings: (1 peers)

Peer Label

----------------- ---------

10.1.1.5:0 18

RP/0/RP0/CPU0:R3#

So R3 will use this label instead! The basic principle is as follows:

Interworking is achieved by replacing an unknown outbound label from one protocol by a valid outgoing label from another protocol.
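A minimal Python sketch of that interworking rule, as seen from R3, might look like this. `ldp_bindings_from_next_hop` is a hypothetical stand-in for the remote LDP bindings shown above (R5 advertising label 18 for 10.1.1.6/32); it models the principle rather than how IOS-XR actually implements it.

```python
from typing import Dict, Optional


def interwork_outbound_label(prefix: str, sr_outbound: Optional[int],
                             ldp_bindings_from_next_hop: Dict[str, int]) -> Optional[int]:
    """Replace a missing SR outbound label with the next hop's LDP label."""
    if sr_outbound is not None:
        return sr_outbound  # SR-capable next hop: keep the SR label
    # Fall back to LDP; None means neither protocol can supply a label.
    return ldp_bindings_from_next_hop.get(prefix)


# Remote bindings R3 learned from R5 (see "sh mpls ldp ipv4 bindings" above).
r5_ldp_bindings = {"10.1.1.6/32": 18}

print(interwork_outbound_label("10.1.1.6/32", None, r5_ldp_bindings))      # 18
print(interwork_outbound_label("2001:1ab::6/128", None, r5_ldp_bindings))  # None
```

The second call previews the problem ahead: for IPv6 there is no LDP binding to inherit, so the fallback comes up empty.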

SR is basically “inheriting” from LDP. R3’s forwarding table looks as follows:

RP/0/RP0/CPU0:R3#sh mpls forwarding labels 17006

Wed Nov 23 00:26:02.364 UTC

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

17006 18 SR Pfx (idx 6) Gi0/0/0/2 10.3.5.5 3808

RP/0/RP0/CPU0:R3#

The local label is the SR label and the outbound label is the LDP label 18. You can see from the traceroute that this is indeed the label used.

RP/0/RP0/CPU0:R1#traceroute 10.1.1.6 source lo0

Wed Nov 23 00:13:59.116 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.6

1 10.1.2.2 [MPLS: Label 17006 Exp 0] 152 msec 142 msec 135 msec

2 10.2.3.3 [MPLS: Label 17006 Exp 0] 142 msec 148 msec 146 msec

3 10.3.5.5 [MPLS: Label 18 Exp 0] 140 msec 137 msec 135 msec

4 10.5.6.6 148 msec * 126 msec

RP/0/RP0/CPU0:R1#

NB. I’ve skipped a couple of details here, namely how LDP to SR works in the opposite direction in conjunction with mapping server statements. These don’t directly relate to our quirk, since we’re focusing on R1 to R6 traffic, but I’d recommend reading the Segment Routing Book Series found on segment-routing.net to get the full details of SR/LDP interoperability.

Now that we’ve verified forwarding through the Cisco, we’ll switch to the Arista path to check that the behaviour is identical. First we’ll shut down R2’s uplink to R3 (Gi0/0/0/1) and unshut its uplink to R4 (Gi0/0/0/2), before rerunning the traceroute:

RP/0/RP0/CPU0:R2(config)#int GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#no shut

RP/0/RP0/CPU0:R2(config-if)#int GigabitEthernet 0/0/0/1

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#commit

Wed Nov 23 00:40:25.104 UTC

LC/0/0/CPU0:Nov 23 00:40:25.219 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Down

LC/0/0/CPU0:Nov 23 00:40:25.318 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Up

RP/0/RP0/CPU0:R2(config-if)#

RP/0/RP0/CPU0:R1#traceroute 10.1.1.6 source lo0

Wed Nov 23 00:41:12.857 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.6

1 10.1.2.2 [MPLS: Label 17006 Exp 0] 57 msec 54 msec 56 msec

2 * * *

3 10.4.5.5 [MPLS: Label 18 Exp 0] 60 msec 53 msec 51 msec

4 10.5.6.6 60 msec * 62 msec

RP/0/RP0/CPU0:R1#

Success! It’s using the LDP label 18 just as before, and if we run some of the Arista CLI commands we see similar inheritance behaviour to the Cisco:

R4#show mpls lfib route | begin 10.1.1.6/32

IL 17006 [1], 10.1.1.6/32

via M, 10.4.5.5, swap 18

payload autoDecide, ttlMode uniform, apply egress-acl

interface Ethernet1

<<snip>>

Here’s the diagram:

So IPv4 looks solid. If no SR, then fall back to LDP. But what happens if we use IPv6? There is no LDP for IPv6. More importantly though… what should happen? Let’s explore what both vendors do and then you can make up your own mind.

IPv6 Connectivity and LDP

Let’s flip back to Cisco and see what the traceroute looks like:

RP/0/RP0/CPU0:R2(config-if)#int GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#int GigabitEthernet 0/0/0/1

RP/0/RP0/CPU0:R2(config-if)#no shut

RP/0/RP0/CPU0:R2(config-if)#commit

Wed Nov 23 00:49:08.711 UTC

LC/0/0/CPU0:Nov 23 00:49:08.787 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/1, changed state to Down

LC/0/0/CPU0:Nov 23 00:49:08.837 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/1, changed state to Up

RP/0/RP0/CPU0:R2(config-if)#

RP/0/RP0/CPU0:R1#traceroute ipv6 2001:1ab::6 source lo0

Wed Nov 23 00:50:51.770 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 2001:1ab:1:2::2 [MPLS: Label 17606 Exp 0] 261 msec 48 msec 47 msec

2 2001:1ab:2:3::3 [MPLS: Label 17606 Exp 0] 52 msec 33 msec 46 msec

3 2001:1ab:3:5::5 50 msec 49 msec 48 msec

4 2001:1ab::6 105 msec 86 msec 88 msec

RP/0/RP0/CPU0:R1#

Here we see something a bit unexpected. R3 is actually popping the top label and forwarding the packet on natively:

But why is this? If you have an incoming label but no outgoing label isn’t that, by definition, a broken LSP? So why does Cisco forward the packet natively?

Well, for this I’m going to take a quote from the Segment Routing Part 1 book (again found on segment-routing.net). Granted, this isn’t an RFC, but it does a good job of explaining the Cisco IOS-XR behaviour.

If the incoming packet has a single label … (the label has the End of Stack (EOS) bit set to indicate it is the last label), then the label is removed and the packet is forwarded as an IP packet. If the incoming packet has more than one label … then the packet is dropped and this would be the erroneous termination of the LSP that we referred to previously.

Segment Routing, Part 1 by Clarence Filsfils, Kris Michielsen, et al.

What’s happening with R3 here is MPLS2IP behaviour (since the LSP is ending and the packet is being forwarded natively). Based on the above, I believe the rule that R3 is following when deciding how to forward the incoming packet works like this:

- If there is a single SR label with the EoS bit set, then forward the packet natively

- Else, treat it as a broken LSP and drop it
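Expressed as a minimal Python sketch, the inferred rule looks something like this. It only models the reading of the behaviour quoted above; the `Action` names and the list-based label stack (top label first) are illustrative assumptions, not IOS-XR internals.

```python
from enum import Enum
from typing import List, Optional


class Action(Enum):
    SWAP = "swap to the outbound label"
    POP_AND_FORWARD_IP = "pop and forward natively (MPLS2IP)"
    DROP = "drop as a broken LSP"


def cisco_like_decision(label_stack: List[int], outbound_label: Optional[int]) -> Action:
    """Decide what to do with a labelled packet; label_stack[0] is the top label."""
    if outbound_label is not None:
        return Action.SWAP
    # No outbound label: only a lone label (EoS bit set) may be popped.
    if len(label_stack) == 1:
        return Action.POP_AND_FORWARD_IP
    return Action.DROP


print(cisco_like_decision([17606], None))         # Action.POP_AND_FORWARD_IP
# 24005 is a hypothetical VPN/service label sitting under the transport label.
print(cisco_like_decision([17606, 24005], None))  # Action.DROP
```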

Both CEF and the LFIB reflect this behaviour with Unlabelled as the outgoing label:

RP/0/RP0/CPU0:R3#show mpls forwarding labels 17606

Wed Nov 23 00:55:21.324 UTC

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- ------------------ ------------ --------------- ------------

17606 Unlabelled SR Pfx (idx 606) Gi0/0/0/2 fe80::5200:ff:fe05:1 \

778986

RP/0/RP0/CPU0:R3#sh cef mpls local-label 17606 EOS

Wed Nov 23 00:55:27.537 UTC

Label/EOS 17606/1, version 27, labeled SR, internal 0x1000001 0x82 (ptr 0xd3575b0) [1], 0x0 (0xe3a44a8), 0xa20 (0xe4dc3a8)

Updated Jan 10 13:37:10.343

remote adjacency to GigabitEthernet0/0/0/2

Prefix Len 21, traffic index 0, precedence n/a, priority 1

via fe80::5200:ff:fe05:1/128, GigabitEthernet0/0/0/2, 8 dependencies, weight 0, class 0 [flags 0x0]

path-idx 0 NHID 0x0 [0xd576738 0x0]

next hop fe80::5200:ff:fe05:1/128

remote adjacency

local label 17606 labels imposed {None}

But why forward if there is only one label?

I believe that Cisco is assuming that if there is only one label, that label is likely to be a transport label. This would imply that the underlying IPv6 address is an endpoint loopback address in the IGP, which any subsequent P router would most likely know. This allows traffic to be forwarded on in brownfield migration scenarios similar to our lab.

If there is more than one label, then it would seem prudent to drop the packet, as any underlying labels are likely to be VPN or service labels that the next P router would not understand.

I can’t be sure that this is the reasoning Cisco were going for, but it seems reasonable to me.

Now that we know how Cisco does it, let’s look at how Arista tackles the same scenario. Just like with IPv4, we’ll flip the path and retry the traceroute:

RP/0/RP0/CPU0:R2(config)#interface GigabitEthernet 0/0/0/1

RP/0/RP0/CPU0:R2(config-if)#shut

RP/0/RP0/CPU0:R2(config-if)#interface GigabitEthernet 0/0/0/2

RP/0/RP0/CPU0:R2(config-if)#no shut

RP/0/RP0/CPU0:R2(config-if)#commit

Wed Nov 23 00:57:01.906 UTC

LC/0/0/CPU0:Wed Nov 23 00:57:02.486 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Down

LC/0/0/CPU0:Wed Nov 23 00:57:02.533 UTC: ifmgr[270]: %PKT_INFRA-LINK-3-UPDOWN : Interface GigabitEthernet0/0/0/2, changed state to Up

RP/0/RP0/CPU0:R2(config-if)#

RP/0/RP0/CPU0:R1#traceroute ipv6 2001:1ab::6 source lo0

Wed Nov 23 01:00:12.976 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 * * *

2 * * *

3 * * *

4 * * *

^C

RP/0/RP0/CPU0:R1#

No Network Engineer ever likes to see a broken traceroute. But clearly something isn’t getting through.

The Arista’s LFIB output starts to give us an idea:

R4#show mpls lfib route 17606

MPLS forwarding table (Label [metric] Vias) - 0 routes

MPLS next-hop resolution allow default route: False

Via Type Codes:

M - MPLS via, P - Pseudowire via,

I - IP lookup via, V - VLAN via,

VA - EVPN VLAN aware via, ES - EVPN ethernet segment via,

VF - EVPN VLAN flood via, AF - EVPN VLAN aware flood via,

NG - Nexthop group via

Source Codes:

G - gRIBI, S - Static MPLS route,

B2 - BGP L2 EVPN, B3 - BGP L3 VPN,

R - RSVP, LP - LDP pseudowire,

L - LDP, M - MLDP,

IP - IS-IS SR prefix segment, IA - IS-IS SR adjacency segment,

IL - IS-IS SR segment to LDP, LI - LDP to IS-IS SR segment,

BL - BGP LU, ST - SR TE policy,

DE - Debug LFIB

R4#

Unlike on the Cisco, there is no entry at all in the Arista’s LFIB for 17606.

Interestingly though, tracing does work directly from R4 to R6:

R4#traceroute ipv6 2001:1ab::6 source 2001:1ab::4

traceroute to 2001:1ab::6 (2001:1ab::6), 30 hops max, 80 byte packets

1 2001:1ab:4:5::5 (2001:1ab:4:5::5) 5.145 ms 9.544 ms 10.500 ms

2 2001:1ab::6 (2001:1ab::6) 95.139 ms 94.834 ms 96.574 ms

R4#

This traceroute is a case of IP2IP forwarding. Arista, knowing it has no label for the next hop, forwards the packet natively. It’s similar to the Cisco behaviour, but Cisco has the aforementioned MPLS2IP rule that bridges the two parts.

To begin troubleshooting the Arista, let’s check the basics. We already know that there is no LFIB entry for 17606. We’d expect to see it at the bottom of this table…

R4#show mpls lfib route detail | inc 17

IP 17001 [1], 10.1.1.1/32

via M, 10.2.4.2, swap 17001

IP 17002 [1], 10.1.1.2/32

IL 17003 [1], 10.1.1.3/32

via M, 10.4.5.5, swap 17

IP 17005 [1], 10.1.1.5/32

IL 17006 [1], 10.1.1.6/32

IP 17601 [1], 2001:1ab::1/128

via M, fe80::5200:ff:fe03:5, swap 17601

IP 17602 [1], 2001:1ab::2/128

via M, 10.2.4.2, swap 17001

via M, 10.4.5.5, swap 17

R4#

Perhaps R4 is not getting the correct Segment Routing information. We know from our initial config check that R6 is configured correctly. When SR is enabled on a device (no matter the vendor), an SR-Capability sub-TLV is added under the Router Capability TLV. This signals that the router is SR capable and carries other SR parameters, such as its SRGB.

We can see that R4 is aware that R6 is SR enabled and gets all of the correct Node-SID information:

R4#sh isis database R6.00-00 detail

IS-IS Instance: LAB VRF: default

IS-IS Level 2 Link State Database

LSPID Seq Num Cksum Life IS Flags

R6.00-00 3184 38204 1104 L2 <>

Remaining lifetime received: 1198 s Modified to: 1200 s

<snip>

IS Neighbor (MT-IPv6): R5.00 Metric: 10

Adj-sid: 16312 flags: [ L V F ] weight: 0x0

Reachability : 10.1.1.6/32 Metric: 0 Type: 1 Up

SR Prefix-SID: 6 Flags: [ N ] Algorithm: 0

Reachability (MT-IPv6): 2001:1ab::6/128 Metric: 0 Type: 1 Up

SR Prefix-SID: 606 Flags: [ N ] Algorithm: 0

Router Capabilities: Router Id: 10.1.1.6 Flags: [ ]

SR Local Block:

SRLB Base: 15000 Range: 1000

SR Capability: Flags: [ I V ]

SRGB Base: 17000 Range: 7000

Algorithm: 0

Algorithm: 1

Segment Binding: Flags: [ ] Weight: 0 Range: 1 Pfx 10.1.1.5/32

SR Prefix-SID: 5 Flags: [ ] Algorithm: 0

R4#show isis segment-routing prefix-segments vrf all

System ID: 1111.1111.0004 Instance: 'LAB'

SR supported Data-plane: MPLS SR Router ID: 10.1.1.4

Node: 10 Proxy-Node: 1 Prefix: 0 Total Segments: 11

Flag Descriptions: R: Re-advertised, N: Node Segment, P: no-PHP

E: Explicit-NULL, V: Value, L: Local

Segment status codes: * - Self originated Prefix, L1 - level 1, L2 - level 2, ! - SR-unreachable,

# - Some IS-IS next-hops are SR-unreachable

Prefix SID Type Flags System ID Level Protection

------------------------- ----- ---------- ----------------------- --------------- ----- ---

<snip>

2001:1ab::1/128 601 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0001 L2 unprotected

2001:1ab::2/128 602 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0002 L2 unprotected

2001:1ab::3/128 603 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0003 L2 unprotected

* 2001:1ab::4/128 604 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0004 L2 unprotected

2001:1ab::6/128 606 Node R:0 N:1 P:0 E:0 V:0 L:0 1111.1111.0006 L2 unprotected

R4#

So far so good. But why no LFIB entry? To understand what is happening here, we need to understand how Arista programs its LFIB.

When Arista forwards using Segment Routing, the entry is first placed in the SR bindings table, and only then does it enter the LFIB. We can see that it makes it into the SR bindings table:

R4#show mpls segment-routing bindings ipv6

2001:1ab::1/128

Local binding: Label: 17601

Remote binding: Peer ID: 1111.1111.0002, Label: 17601

2001:1ab::2/128

Local binding: Label: 17602

Remote binding: Peer ID: 1111.1111.0002, Label: imp-null

2001:1ab::3/128

Local binding: Label: 17603

Remote binding: Peer ID: 1111.1111.0002, Label: 17603

2001:1ab::4/128

Local binding: Label: imp-null

Remote binding: Peer ID: 1111.1111.0002, Label: 17604

2001:1ab::6/128

Local binding: Label: 17606

Remote binding: Peer ID: 1111.1111.0002, Label: 17606

R4#

But why does it not then enter the LFIB? I believe it fails to be programmed into the LFIB because of the rules outlined above, namely:

- Local label: The Node SID + the SRGB Base (in our case 606 + 17000 = 17606)

- Outbound label: The Node SID + the SRGB Base of the next-hop for that prefix

The outbound label can’t be determined, since the IGP next hop to 2001:1ab::6 is R5, a device that isn’t running SR. With no LDP to inherit from (since there is no LDP for IPv6), and without a special rule to forward natively (like Cisco has), the LFIB entry is never programmed and the packet is dropped!

Note that there are Remote Bindings in the SR bindings table above. But these are all from R2 (1111.1111.0002 is the ISIS system ID of R2), which is not the IGP next hop.
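The Arista side can be modelled the same way. In this minimal Python sketch (an assumption about the logic, inferred from the outputs above rather than taken from EOS), an LFIB entry is only created when a binding learned from the IGP next hop is available, whether SR or LDP; bindings from any other peer, such as R2, are ignored. Router names stand in for ISIS system IDs to keep it short.

```python
from typing import Dict, Optional

Bindings = Dict[str, Dict[str, int]]  # peer -> {prefix: label}


def arista_like_lfib_entry(prefix: str, local_label: int, igp_next_hop: str,
                           sr_bindings: Bindings,
                           ldp_bindings: Bindings) -> Optional[dict]:
    """Return an LFIB entry, or None if no outbound label can be resolved."""
    # Only labels learned from the IGP next hop are usable.
    out = sr_bindings.get(igp_next_hop, {}).get(prefix)
    if out is None:
        out = ldp_bindings.get(igp_next_hop, {}).get(prefix)
    if out is None:
        return None  # nothing programmed: packets arriving with local_label are dropped
    return {"in": local_label, "out": out, "via": igp_next_hop}


# R4's view: IPv6 SR binding only from R2, LDP binding for R6's IPv4 loopback from R5.
sr = {"R2": {"2001:1ab::6/128": 17606}}
ldp = {"R5": {"10.1.1.6/32": 18}}

print(arista_like_lfib_entry("10.1.1.6/32", 17006, "R5", sr, ldp))
print(arista_like_lfib_entry("2001:1ab::6/128", 17606, "R5", sr, ldp))  # None
```

The IPv4 lookup succeeds via LDP (in 17006, out 18, via R5), while the IPv6 lookup returns `None`: unlike Cisco’s MPLS2IP rule, there is no native-forwarding fallback in this model.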

NB. If you are doing packet traces on a physical appliance, the “show cpu counters queue summary” command will reveal the “CoppSystemMplsLabelMiss” counter incrementing as traffic is dropped during the traceroute. I’ve omitted it here as the command won’t work in a virtual lab environment.

So who is correct?

The obvious question at this point becomes: who is correct? Yes, the traffic gets through the Cisco, but isn’t it arguably violating the principle of a broken LSP? After all, if LDP goes down between two devices in an SP core, don’t we want to avoid using that link? Isn’t that the idea behind things like LDP-IGP Sync? What if Cisco forwards an MPLS2IP packet natively and the next hop sends the packet somewhere unintended? I imagine situations like this would be rare, but maybe Arista is right to play it safe and drop it?

I’ve tried to find an authoritative source by going on an RFC hunt, in the hope of using it to determine what behaviour ought to be followed.

Unfortunately, I couldn’t find a direct reference in any RFC. The closest reference I could get was a brief mention in RFC 8661 in the MPLS2MPLS, MPLS2IP, and IP2MPLS Coexistence section.

The same applies for the MPLS2IP forwarding entries. MPLS2IP is the forwarding behavior where a router receives a labeled IPv4/IPv6 packet with one label only, pops the label, and switches the packet out as IPv4/IPv6.

RFC 8661 Section 2.1

This does little more than reference the existence of MPLS2IP forwarding. It certainly doesn’t tell us the correct behaviour. If anyone knows of an authority to resolve this, please feel free to let me know! Unfortunately, at this stage each vendor appears free to program whatever forwarding behaviour it likes.

To that end, I put it to you, what do you think is the best behaviour in scenarios like this?

My personal preference is the Cisco option, because it allows for brownfield migrations like the one we encountered. Without an IPv6 label distribution protocol, or without reverting to 6PE, I believe this behaviour is warranted. The most likely worst-case scenario is that the next hop router will simply discard the packet due to not having a route, although I concede there might be scenarios where this could be problematic.

Until there is consistency between vendors we’ll need ways to work around scenarios like this. Let’s take a look at a few.

Solutions

Sadly most of the solutions to this are suitably dull. They either involve removing the IPv6 next hop, the label, or both…

Remove the IPv6 Node SID

This is perhaps the simplest option. By removing the IPv6 node SID from R6, R1 would have no entry for R6 in its LFIB and as a result would forward the traffic natively. We can demonstrate this by doing the following:

! The link via the Cisco device R3 is admin down to make sure traffic travels via the Arista

RP/0/RP0/CPU0:R6(config)#router isis LAB

RP/0/RP0/CPU0:R6(config-isis)# interface Loopback0

RP/0/RP0/CPU0:R6(config-isis-if)# address-family ipv6 unicast

RP/0/RP0/CPU0:R6(config-isis-if-af)#no prefix-sid absolute 17606

RP/0/RP0/CPU0:R6(config-isis-if-af)#commit

Wed Nov 23 01:10:43.103 UTC

RP/0/RP0/CPU0:Wed Nov 23 01:10:45.432 UTC: config[67901]: %MGBL-CONFIG-6-DB_COMMIT : Configuration committed by user 'user1'. Use 'show configuration commit changes 1000000015' to view the changes.

RP/0/RP0/CPU0:R6(config-isis-if-af)#RP/0/RP0/CPU0:Wed Nov 23 01:10:47.089 UTC: bgp[1060]: %ROUTING-BGP-5-ADJCHANGE_DETAIL : neighbor 2001:1ab::1 Up (VRF: default; AFI/SAFI: 2/1) (AS: 100)

RP/0/RP0/CPU0:R6(config-isis-if-af)#

! note the native forwarding

RP/0/RP0/CPU0:R1#traceroute 2001:1ab::6 source 2001:1ab::1

Wed Nov 23 01:11:02.802 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 2001:1ab:1:2::2 79 msec 4 msec 3 msec

2 2001:1ab:2:4::4 6 msec 5 msec 5 msec

3 2001:1ab:4:5::5 8 msec 8 msec 7 msec

4 2001:1ab::6 12 msec 11 msec 9 msec

RP/0/RP0/CPU0:R1#

Weight out the Arista link

I’ll only mention this in passing since, whilst it does allow the Arista to remain live in the network for IPv4 traffic, weighting the link out is more akin to avoiding the problem than solving it. Here’s an example of changing the ISIS metric:

R4(config)#int eth2

R4(config-if-Et2)#isis ipv6 metric 9999

R4(config-if-Et2)#int eth4

R4(config-if-Et4)#isis ipv6 metric 9999

Reverting to 6PE

You might ask why this lab chooses to use native IPv6 BGP in the first place, rather than 6PE. Other than wanting to future-proof the network and utilise all the benefits that come with SR, the real-world scenario upon which this blog is loosely based involved a 6PE bug. Basically, a 6PE router was allocating one label per IPv6 prefix rather than a null label. This resulted in label exhaustion issues on the device in question. The details are beyond the scope of this blog but provide a little context. Regardless, if we use 6PE, the next hops are now IPv4 loopbacks. This allows the normal SR/LDP interoperability to take place as outlined above. Here is the basic config and verification:

RP/0/RP0/CPU0:R1(config)#router bgp 100

RP/0/RP0/CPU0:R1(config-bgp)#address-family ipv6 unicast

RP/0/RP0/CPU0:R1(config-bgp-af)#allocate-label all

RP/0/RP0/CPU0:R1(config-bgp-af)#exit

RP/0/RP0/CPU0:R1(config-bgp)#neighbor 10.1.1.6

RP/0/RP0/CPU0:R1(config-bgp-nbr)#address-family ipv6 labeled-unicast

! similar commands done on R6 not shown here

RP/0/RP0/CPU0:R1#sh bgp ipv6 labeled-unicast

Wed Nov 23 01:17:39.800 UTC

BGP router identifier 10.1.1.1, local AS number 100

BGP generic scan interval 60 secs

Non-stop routing is enabled

BGP table state: Active

Table ID: 0xe0800000 RD version: 4

BGP main routing table version 4

BGP NSR Initial initsync version 2 (Reached)

BGP NSR/ISSU Sync-Group versions 0/0

BGP scan interval 60 secs

Status codes: s suppressed, d damped, h history, * valid, > best

i - internal, r RIB-failure, S stale, N Nexthop-discard

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

*> 2001:cafe:1::/64 2001:db8:1::1 0 0 65489 i

*>i2001:cafe:2::/64 10.1.1.6 0 100 0 65489 i

Processed 2 prefixes, 2 paths

RP/0/RP0/CPU0:R1#

CE1#traceroute 2001:cafe:2::1 source 2001:cafe:1::1

Type escape sequence to abort.

Tracing the route to 2001:CAFE:2::1

1 2001:DB8:1:: 4 msec 3 msec 1 msec

2 * * *

3 * * *

4 ::FFFF:10.4.5.5 [MPLS: Labels 20/16302 Exp 0] 15 msec 14 msec 26 msec

5 * * *

6 2001:DB8:2::1 15 msec 14 msec 10 msec

CE1#

Implement Segment Routing using IPv6 Headers

I’ll only mention this in passing, as I’ve not seen this implemented in the wild and am not sure if my lab environment would support it. Suffice it to say that if this could be implemented, it would remove the need for LDP labels entirely.

6PE from P router to PE

I toyed with this idea out of curiosity more than anything else. I’d not recommend this for a real world deployment, but the general idea is as follows:

- Run 6PE between the trouble P router (in our case R4) and the destination PE router (R6)

- The PE router would advertise its IPv6 loopback over the 6PE session.

- The P router would filter its 6PE session to only accept PE loopback addresses

- The P router would set the Administrative Distance of the IGP to be 201, so it would prefer the iBGP AD of 200 to reach the end point.

The idea is that as soon as the traffic reaches the P router, the next hop to the IPv6 endpoint is not seen via the IGP but via the 6PE session. The result is that the incoming IPv6 SR label is replaced with two labels: the bottom label is the 6PE label for the IPv6 endpoint address, and the top label is the transport label for the IPv4 loopback of the 6PE peer (SR to LDP interoperability can take over here). This might be scalable if the P router were running 6PE to all the PEs via a route reflector, with an inbound policy only allowing in their next-hop loopbacks.
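A minimal Python sketch of the two decisions involved, route selection on administrative distance and the label-stack rewrite, is below. It is illustrative only: the AD values come from the idea above (IGP raised to 201 versus iBGP 200, with 115 as the IOS-XR IS-IS default for comparison), and the labels are the ones seen in the R3 test later in this post (LDP label 18 toward 10.1.1.6 and 6PE label 16300). The function names are hypothetical.

```python
from typing import Dict, List


def best_source(distances: Dict[str, int]) -> str:
    """Pick the route source with the lowest administrative distance."""
    return min(distances, key=lambda source: distances[source])


def rewrite_stack(incoming: List[int], sixpe_label: int,
                  transport_label: int) -> List[int]:
    """Swap the top (IPv6 node-SID) label for [transport, 6PE]; index 0 is the top."""
    return [transport_label, sixpe_label] + incoming[1:]


# With the IGP raised to 201, the iBGP (6PE) route wins.
source = best_source({"isis": 201, "ibgp": 200})
stack = rewrite_stack([17606], sixpe_label=16300, transport_label=18) if source == "ibgp" else [17606]
print(source, stack)  # ibgp [18, 16300]

# With the IS-IS default of 115, the IGP route (and the plain SR label) stays in use.
print(best_source({"isis": 115, "ibgp": 200}))  # isis
```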

To put this in diagram form, it would look like this:

Unfortunately my virtual Arista didn’t support 6PE. I did test the principle on my Cisco P device (R3) and it seemed to work. Here is the basic config and verification:

! R3's BGP config

router bgp 100

bgp router-id 10.1.1.3

bgp log neighbor changes detail

address-family ipv6 unicast

allocate-label all

!

neighbor 10.1.1.6

remote-as 100

update-source Loopback0

address-family ipv6 labeled-unicast

route-policy ONLY-PEs in

!

!

!

route-policy ONLY-PEs

if destination in (2001:1ab::6/128) then

pass

else

drop

endif

end-policy

! R6's BGP config

router bgp 100

bgp router-id 10.1.1.6

address-family ipv6 unicast

network 2001:1ab::6/128

allocate-label all

!

!

neighbor 10.1.1.4

remote-as 100

update-source Loopback0

address-family ipv6 labeled-unicast

!

!

R3 now sees the next hop via the 6PE BGP session, with a 6PE label:

RP/0/RP0/CPU0:R3#sh route ipv6 2001:1ab::6

Wed Nov 23 01:21:43.710 UTC

Routing entry for 2001:1ab::6/128

Known via "bgp 100", distance 200, metric 0, type internal

Installed Nov 23 01:19:01.151 for 00:02:23

Routing Descriptor Blocks

::ffff:10.1.1.6, from ::ffff:10.1.1.6

Nexthop in Vrf: "default", Table: "default", IPv4 Unicast, Table Id: 0xe0000000

Route metric is 0

No advertising protos.

RP/0/RP0/CPU0:R3#sh bgp ipv6 labeled-unicast 2001:1ab::6

Wed Nov 23 01:21:45.081 UTC

BGP routing table entry for 2001:1ab::6/128

Versions:

Process bRIB/RIB SendTblVer

Speaker 3 3

Last Modified: Nov 23 01:18:58.980 for 00:01:54

Paths: (1 available, best #1)

Not advertised to any peer

Path #1: Received by speaker 0

Not advertised to any peer

Local

10.1.1.6 (metric 20) from 10.1.1.6 (10.1.1.6)

Received Label 16300

Origin IGP, metric 0, localpref 100, valid, internal, best, group-best, labeled-unicast

Received Path ID 0, Local Path ID 1, version 3

RP/0/RP0/CPU0:R3#

Traceroute confirms the 6PE path:

RP/0/RP0/CPU0:R1#traceroute 2001:1ab::6 source 2001:1ab::1

Wed Nov 23 01:23:24.423 UTC

Type escape sequence to abort.

Tracing the route to 2001:1ab::6

1 2001:1ab:1:2::2 [MPLS: Label 17606 Exp 0] 38 msec 6 msec 5 msec

2 2001:1ab:2:3::3 [MPLS: Label 17606 Exp 0] 6 msec 5 msec 5 msec

3 ::ffff:10.3.5.5 [MPLS: Labels 18/16300 Exp 0] 5 msec 5 msec 6 msec

4 2001:1ab::6 7 msec 6 msec 6 msec

RP/0/RP0/CPU0:R1#

18 is R5’s LDP label for 10.1.1.6 and 16300 is the 6PE label for 2001:1ab::6. Whilst this technically works, it would also mean that R3 always uses 6PE to reach any IPv6 endpoint it receives over the 6LU session. It would, however, allow the IPv6 node SID and native IPv6 session to remain in place.

Again, this was a thought exercise more than anything else – I wouldn’t recommend this for a live deployment without a lot more testing.

Conclusion

Here, we’ve seen an unexpected scenario whereby Cisco forwards single-labelled SR packets natively, but Arista treats this as a broken LSP, as it arguably is. This causes problems for MPLS2IP forwarding of IPv6 packets in a brownfield migration. Ultimately, it comes down to the way each vendor chooses to implement its LFIB. At the time of writing, the only real solutions are to remove the IPv6 next-hop, the label, or both – however, it will be interesting to see, moving forward, whether SRv6 or perhaps even some inter-vendor consensus could resolve this interesting quirk. Thanks so much for reading. Thoughts and comments are welcome as always.

TI-LFA FTW!

Having fast convergence times is one of the most important aspects of running any Service Provider network. But perhaps more important is making sure that you actually have the necessary backup paths in the first place.

This quirk explores a network within which neither Classic Loop Free Alternate (LFA) nor Remote-LFA (R-LFA) provide complete backup coverage, and how Segment Routing can solve this using a technology called Topology Independent Loop Free Alternate (or TI-LFA).

This blog comes with a downloadable EVE-NG lab that can be found here. It is configured with the final TI-LFA and SR setup, but I’ll provide the configuration examples for both Classic-LFA and R-LFA as we go. We’ll just look at link protection in this post, but the principle for node and SRLG protection is similar.

I’ll assume anyone reading is well-versed in the IGP + LDP Service Provider core model, but will give a whistle-stop introduction to Segment Routing for those who haven’t run into it yet…

Segment Routing – A Brief Introduction

Segment Routing (or SR) is one of the most exciting new technologies in the Service Provider world. On the face of it, it looks like “just another way to communicate labels” but once you dig into it, you’ll realise how powerful it can be.

This introduction will be just enough to get you to understand this post if you’ve never used SR before. I highly recommend checking out segment-routing.net for more information.

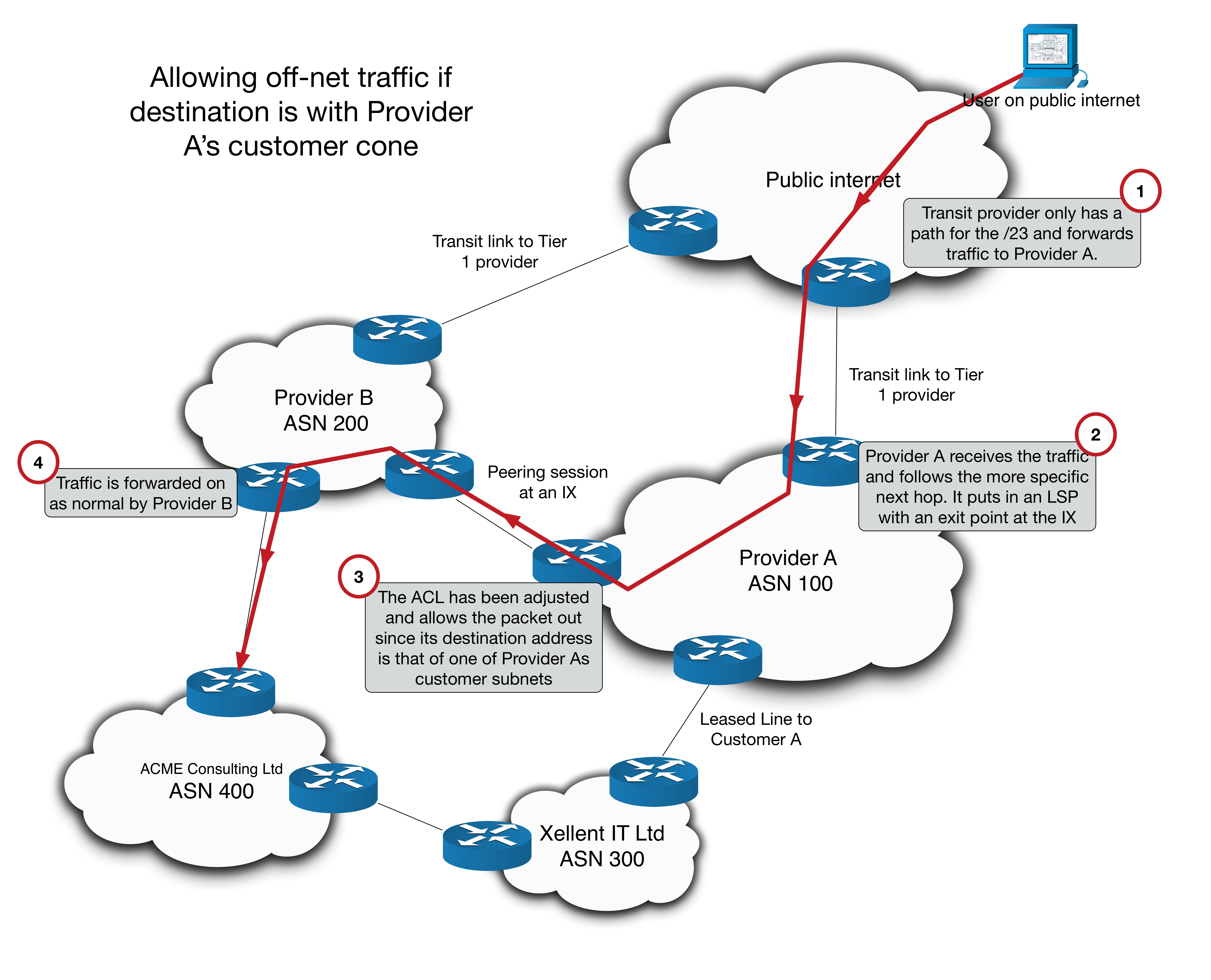

The best way to introduce SR is to compare it to LDP. So to that end, here’s a basic diagram as a reminder of how LDP works:

R3 is advertising its 1.1.1.3/32 loopback via ISIS and LDP communicates label information. This should hopefully be very familiar.

So how does SR differ?

Well, the difference is in how the label is communicated. Instead of using an extra protocol, like LDP, the label information is carried in the IGP itself. In the case of ISIS, it uses TLVs. In the case of OSPF it uses opaque LSAs (which are essentially a mechanism for carrying TLVs in and of themselves).

This means that instead of each router allocating its own label for every single prefix in its IGP routing table and then advertising these to its neighbors over multiple LDP sessions, the router that sources the prefix advertises its label information inside its IGP update packets. Only the source router actually does any allocating.

Before I show you a diagram, let’s get slightly technical about what SR is…

Segment Routing is defined as … “a source-based routing paradigm that uses stacks of instructions – referred to as Segments – to forward packets”. This might seem confusing, but basically Segments are MPLS labels (with SRv6 they can also be IPv6 addresses, but we’ll just deal with MPLS labels here). Each label in a stack can be thought of as an instruction that tells the router what to do with the packet.

There are two types of Segments (or instructions) we need to be concerned with in this post: Node-SIDs and Adj-SIDs.

- A Node-SID in a Service Provider network typically refers to a loopback address of a router that something like BGP would use as the next-hop (e.g. it puts the self in next-hop-self). This is analogous to the LDP label for a given IGP prefix that each router in an ISP core would assign. The “instruction” for a Node-SID is: forward this packet towards the prefix along your best (ECMP) path for it.

- An Adj-SID represents a router’s IGP adjacency to a neighbor. An Adj-SID has the “instruction” of forwarding this packet out of the interface toward that neighbor.

So how are these allocated and advertised?

Like I said before, they use the TLVs (or opaque LSAs) of the IGPs involved. But the advertisements of Adj-SIDs and Node-SIDs do differ slightly…

Adj-SIDs are (by default) automatically allocated from the default MPLS label pool (the same pool used to allocate LDP labels) and are simply advertised inside the TLV “as is”. There is more than one type of Adj-SID for each IGP neighbor: they come in both protected/unprotected and IPv4/IPv6 flavours. This post will only deal with IPv4 unprotected, so don’t worry about the others for now.

The Node-SID is a little more complicated. It is statically assigned under the IGP config and comes from a reserved label range called the SRGB or Segment Routing Global Block. This is a different block from the default MPLS one and the best way to understand it is to put it in context…

Let’s say your SRGB is 17000-18999. This is a range of 2000 labels. And let’s say that each router in the network gets a Node-SID based on its router number (e.g. R5 gets Node-SID 17005, etc.). When a router advertises this information inside the TLV, it breaks it up into several key bits of information:

• The Prefix itself – a /32 when dealing with IPv4.

• A globally significant Index – this is just a number. In our case R5 gets index 5 and so on…

• The locally significant SRGB Base value – the first label in the Segment Routing label range. In our case the SRGB base is 17000.

• A locally significant Range value stating how big the SRGB is – for us it’s 2000

So for any given router, its SRGB runs from the Base up to the Base plus the Range minus one (17000 to 18999 in our case). And because both the Base and the Range are locally significant, so is the SRGB.
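To make the Base/Range arithmetic concrete, here’s a small Python sketch (my own illustration, not anything a router actually runs) that turns a Base and Range into the first and last label of the block, and maps an Index onto it. The function names are hypothetical.

```python
def srgb_bounds(base, size):
    """Return the first and last label of an SRGB given its Base and Range."""
    return base, base + size - 1


def node_sid_label(base, size, index):
    """Return the label a router uses for a Node-SID Index within its own SRGB."""
    if not 0 <= index < size:
        raise ValueError(f"index {index} does not fit in an SRGB of size {size}")
    return base + index


print(srgb_bounds(17000, 2000))        # (17000, 18999)
print(node_sid_label(17000, 2000, 5))  # 17005, i.e. R5's Node-SID
```

The range check reflects the fact that an Index only makes sense if it lands inside the receiving router’s own block.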

What does this local significance mean?

Well, it means that it can differ from one device to another… so when I said above “Let’s say your SRGB is 17000-18999”, I assumed that all devices in the network have the same SRGB (and by extension the same Base and Range) configured. Again, to best understand this, let’s continue within our current context…

Just like with LDP, each router installs an In and an Out label in the LFIB for each prefix:

• The In label is the Index of that prefix plus its own local SRGB Base.

• The Out label is the Index plus the downstream neighbor’s SRGB Base.

Let’s put this in diagram form to illustrate. In the below diagram we are following how R4 advertises its loopback and label information:

Looking at the LSPDU in the diagram above, R4 is advertising…

- The 1.1.1.4/32 Prefix

- An Index of 4

- An SRGB Base of 17000

- A Range of 2000

- An unprotected IPv4 Adj-SID for each of its neighbors (R3 and R5) – other Adj-SIDs have been left out for simplicity

Now let’s consider how R2 installs entries into its LFIB for 1.1.1.4/32. R2’s best path to reach 1.1.1.4/32 is via R3, so it takes the information from both R4’s LSPDU and R3’s LSPDU…

In Label

R2’s In label for 1.1.1.4/32 is 17000 (its own SRGB Base) plus 4 (the Index from R4.00-00) = 17004.

Out Label

R2’s Out label for 1.1.1.4/32 is 17000 (R3’s SRGB Base which it would have got from R3.00-00) plus 4 (the Index from R4.00-00) = 17004.

You can very quickly see that if every device in the network has 17000-18999 as its SRGB, then the label will remain the same as traffic is forwarded through the network, because the In and Out labels will be the same!
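The In/Out rule can be sketched in a few lines of Python. The SRGB Base values are the lab’s 17000, plus one hypothetical case where R3 advertises a Base of 20000, included only to show what local significance would mean in practice; the function and variable names are made up for illustration.

```python
# SRGB Base advertised by each router. The lab uses 17000 everywhere.
SRGB_BASE = {"R2": 17000, "R3": 17000, "R4": 17000}


def lfib_entry(router, downstream, index, bases):
    """Return the (In, Out) labels `router` installs for a prefix with this Index."""
    in_label = bases[router] + index       # own SRGB Base + Index
    out_label = bases[downstream] + index  # downstream neighbor's SRGB Base + Index
    return in_label, out_label


print(lfib_entry("R2", "R3", 4, SRGB_BASE))  # (17004, 17004)

# Hypothetical: if R3 advertised an SRGB Base of 20000, R2's Out label would change.
mixed = dict(SRGB_BASE, R3=20000)
print(lfib_entry("R2", "R3", 4, mixed))      # (17004, 20004)
```

With identical SRGBs the swap is to the same label; with differing SRGBs R2 still swaps correctly, it just swaps to a different value.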

From here we can illustrate how SR is a source routing paradigm. Let us assume that in our test network, traffic comes into R1 and is destined for R5. However, for some reason we want to send traffic over the R4-R5 link, even though it is not the best IGP path. R1 can do this by stacking instructions (in the form of labels) onto the packet that other routers can interpret.

Here’s how it works:

R1 has added {17004} as the top label and {16345} as the bottom of stack label. Don’t worry about how the label stack is derived. There are multiple policies and protocols that can facilitate this – but that is another blog in and of itself!

If you follow the packet through the network you can see that R2 is essentially doing a swap operation to the same label. R3 is then performing standard PHP before forwarding the packet to R4 (PHP works slightly differently in Segment Routing – I won’t detail it here but for our scenario it operates the same as in LDP). R4 sees the instruction of {16345} which tells it to pop the label and send it out of the interface for its adjacency to R5 (regardless of its best path).
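To follow the label stack hop by hop, here’s a toy Python model of the forwarding described above. It’s a sketch under stated assumptions: the LFIB entries are hand-written from the walkthrough (R2 swaps 17004 to 17004, R3 performs PHP, R4 treats 16345 as its Adj-SID to R5), and the table layout is hypothetical rather than how IOS-XR stores its LFIB.

```python
# Hand-written LFIB entries for the walkthrough: label -> (action, new label, next hop).
# The table layout is hypothetical; only the operations come from the text.
LFIB = {
    "R2": {17004: ("swap", 17004, "R3")},  # Node-SID of R4: swap to the same label
    "R3": {17004: ("pop", None, "R4")},    # penultimate hop for R4: PHP
    "R4": {16345: ("pop", None, "R5")},    # Adj-SID: pop and send over the R4-R5 link
}


def forward(first_hop, stack):
    """Follow a label stack (top of stack first) until it is empty."""
    router, stack, trace = first_hop, list(stack), []
    while stack:
        action, new_label, next_hop = LFIB[router][stack[0]]
        trace.append((router, stack[0], action))
        if action == "swap":
            stack[0] = new_label
        else:
            stack.pop(0)
        router = next_hop
    return router, trace


egress, hops = forward("R2", [17004, 16345])  # R1 imposes the stack and sends to R2
for hop in hops:
    print(hop)
print("delivered unlabelled to", egress)
```

Note that no router other than R1 needs to know anything about the steering decision; each one just acts on the top label.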

This illustrates a number of advantages for SR:

• The source router is doing the steering.

• There is no need to keep state in the network. In a traditional IGP+LDP network this kind of steering is typically achieved using MPLS TE, which involves RSVP signalling from the head-end router to the tail-end and back, with routers along the path holding state for each tunnel. With SR the source simply instantiates the label stack and forwards the packet.

• No need to run LDP. You now have one less protocol to run and no more LDP/IGP Sync issues.

• IPv6 label forwarding: IPv6 LDP never really took off. Cisco IOS-XR routers are able to advertise node-SIDs for both IPv4 and IPv6 prefixes. This can eliminate the need for technologies like 6PE.

Before moving on I’ll briefly show how, for example, SR on R4 would be configured for IOS-XR:

segment-routing

global-block 17000 23999 <<< the SRGB Base and range are auto calculated based on this

!

interface Loopback0

ipv4 address 1.1.1.4 255.255.255.255

!

router isis LAB

is-type level-2-only

net 49.0100.1111.1111.0004.00

address-family ipv4 unicast

metric-style wide

segment-routing mpls sr-prefer <<< sr-prefer is needed if LDP is also running

!

address-family ipv6 unicast

metric-style wide

segment-routing mpls sr-prefer

!

interface Loopback0

passive

address-family ipv4 unicast

prefix-sid absolute 17004 <<< configured as absolute values but broken up in the TLV

!

!

interface GigabitEthernet0/0/0/0

point-to-point

address-family ipv4 unicast

!

interface GigabitEthernet0/0/0/1

point-to-point

address-family ipv4 unicast

!

!

!

There are more types of SIDs and many more applications for Segment Routing than I have shown here – but if you’re new to SR, this brief summary should be enough to help you understand TI-LFA. With that said, let’s look at how LFA and its different flavours work…

LFA Introduction

Loop Free Alternate is a Fast Reroute (FRR) technology that basically prepares a backup path ahead of a network failure. In the event of a failure, the traffic can be forwarded into the backup path immediately (the typical goal is sub-50ms) with minimal downtime. It can be thought of as roughly analogous to EIGRP’s feasible successor.

The best way to learn is by example. So what I’ll do is walk through Classic-LFA, Remote-LFA and TI-LFA showing how each improves on the last. First, however, I’ll introduce some terminology before we get to the actual topologies:

• PLR (Point of Local Repair) – The router doing the protection. This is the router that will watch for outages on its protected elements and initiate FRR behaviour if needed.

• Release Point – The point in the network where, with respect to the destination prefix, the failure of the Protected Element makes no difference. It’s the job of the PLR to get traffic to the Release Point.

• C, Protected Element – The part of the network being protected against failure. LFA can provide Link Protection, Node Protection or SRLG (Shared Risk Link Group) protection. This post only covers Link Protection, but the principle is the same.

• D, Destination prefix – LFA is done with respect to a destination. So when presenting formulas and diagrams, D will refer to the destination prefix.

• N, Neighbor – A neighbor connected to the PLR that (in the case of Classic-LFA) is a possible Release Point for an FRR solution.

• Post-convergence path – Refers to the best path to the destination, after the network has converged around the failure.

If a failure occurs at a given PLR router, it does the following:

- Assumes that the rest of the network is NOT aware of the failure yet – i.e. all the other routers think the link is still up and their CEF entries reflect that.

- Asks itself where it can send traffic such that it will not loop back or try to cross the Protected Element that just went down – or to put it another way, where is the Release Point?

- If it has a directly connected Neighbor that satisfies the previous point, then send it to that Neighbor. Nice and easy, job done. This is Classic-LFA.

- If, however, the Release Point is not directly connected, traffic will need to be tunnelled to it somehow – this is R-LFA and TI-LFA.

Now that we’ve introduced the basic mechanism, let’s start with Classic-LFA.

Classic-LFA

Here is our starting topology:

The link we’re looking to protect is the link between R6 and R7. The prefix we will be protecting is the loopback of R3 (10.1.1.3/32). Traffic will be coming from R8. This makes R7 the PLR. We haven’t implemented SR yet so we’re just working with a standard IGP + LDP model.

Download the Classic-LFA configs here.

So, if you are protecting a single link, Classic-LFA (also called local-LFA) can help. The rule for Classic-LFA is this (where Dist(x,y) is the IGP cost from x to y before the failure):

Dist(N, D) < Dist (PLR, N) + Dist (PLR, D)

If the cost for the Neighbor to reach the Destination is less than the cost of the PLR to reach the Neighbor plus the cost of the PLR to get to the Destination, the Neighbor is a valid Release Point. In short, this means that traffic won’t loop back or try to use the Protected Element.

Here’s the idea using our topology:

Prior to the failure traffic takes the R8-R7-R6-R2-R3 path.

When the R6-R7 link fails R7 must figure out where to send the traffic.

It can’t send it to R11. Remember, R11 isn’t aware of the outage yet and its best path to R3 is back via R7, so it will simply loop it back. Or to put this in the formula:

Dist(N, D) < Dist (PLR, N) + Dist (PLR, D)

Dist(R11, R3) < Dist (R7, R11) + Dist (R7, R3)

40 < 10 + 30 FALSE!

But it can send it to R2! R2’s best path to R3’s loopback doesn’t cross the R6-R7 link:

Dist(N, D) < Dist (PLR, N) + Dist (PLR, D)

Dist(R2, R3) < Dist (R7, R2) + Dist (R7, R3)

10 < 100 + 30 TRUE!

So R2 is the valid Release Point and if R6-R7 fails R7 can forward traffic immediately over to R2.
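The Classic-LFA check is simple enough to express as a function. This Python sketch just plugs in the costs from the two worked examples above. One assumption to flag: RFC 5286 writes the middle term as Dist(N, PLR), which equals Dist(PLR, N) only when link metrics are symmetric, as they appear to be in this lab.

```python
def is_classic_lfa(dist_n_d, dist_plr_n, dist_plr_d):
    """Loop-free check: Dist(N, D) < Dist(PLR, N) + Dist(PLR, D).

    Assumes symmetric metrics, so Dist(PLR, N) == Dist(N, PLR) as in RFC 5286.
    """
    return dist_n_d < dist_plr_n + dist_plr_d


DIST_R7_R3 = 30  # PLR's pre-failure cost to the Destination
candidates = {
    "R11": (40, 10),   # (Dist(N, R3), Dist(R7, N))
    "R2": (10, 100),
}
for neighbor, (n_to_d, plr_to_n) in candidates.items():
    print(neighbor, is_classic_lfa(n_to_d, plr_to_n, DIST_R7_R3))
# R11 False
# R2 True
```

The strict "less than" matters: for R11 the two sides are equal (40 vs 40), which means R11 would happily send the traffic straight back to R7.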

Configuration and Verification of Classic-LFA

The below IOS-XR config shows the basic IS-IS config from R7’s point of view (I’ve left out the config for IPv6 for brevity, but all attached configs and the downloadable lab contain IPv6).

! Standard traceroute showing normal traffic flow

RP/0/RP0/CPU0:R8#traceroute 10.1.1.3 source loopback 0

Wed Jul 21 18:56:04.316 UTC

Type escape sequence to abort.

Tracing the route to 10.1.1.3

1 10.7.8.7 [MPLS: Label 16723 Exp 0] 64 msec 48 msec 53 msec

2 10.6.7.6 [MPLS: Label 16619 Exp 0] 50 msec 52 msec 52 msec

3 10.2.6.2 [MPLS: Label 16212 Exp 0] 56 msec 52 msec 52 msec

4 10.2.3.3 52 msec * 51 msec

RP/0/RP0/CPU0:R8#

! Configuration

hostname R7

!

router isis LAB

is-type level-2-only

net 49.0100.1111.1111.0007.00

log adjacency changes

address-family ipv4 unicast

metric-style wide

advertise passive-only

mpls ldp auto-config

!

interface Loopback0

passive

address-family ipv4 unicast

!

!

! This is the link to R6

interface GigabitEthernet0/0/0/1

point-to-point

address-family ipv4 unicast

fast-reroute per-prefix level 2 << enable Classic LFA

mpls ldp sync

!

!

<other interfaces omitted for brevity>

! Verifying LFA path via link to R2 with overall metric of 110

RP/0/RP0/CPU0:R7#show isis fast-reroute 10.1.1.3/32

Wed Jul 21 18:56:24.064 UTC

L2 10.1.1.3/32 [30/115]

via 10.6.7.6, GigabitEthernet0/0/0/1, R6, Weight: 0

Backup path: LFA, via 10.2.7.2, GigabitEthernet0/0/0/4, R2, Weight: 0, Metric: 110

RP/0/RP0/CPU0:R7#

RP/0/RP0/CPU0:R7#show cef 10.1.1.3/32

Wed Jul 21 18:56:40.664 UTC

10.1.1.3/32, version 314, internal 0x1000001 0x0 (ptr 0xe1bd080) [1], 0x0 (0xe380928), 0xa28 (0xeddf9f0)

Updated Jul 21 18:55:44.495

remote adjacency to GigabitEthernet0/0/0/1

Prefix Len 32, traffic index 0, precedence n/a, priority 3

via 10.2.7.2/32, GigabitEthernet0/0/0/4, 5 dependencies, weight 0, class 0, backup (Local-LFA) [flags 0x300]

path-idx 0 NHID 0x0 [0xecbd890 0x0]

next hop 10.2.7.2/32

remote adjacency

local label 16723 labels imposed {16212} << R2's label for R3

via 10.6.7.6/32, GigabitEthernet0/0/0/1, 5 dependencies, weight 0, class 0, protected [flags 0x400]

path-idx 1 bkup-idx 0 NHID 0x0 [0xed68270 0x0]

next hop 10.6.7.6/32

local label 16723 labels imposed {16619}

RP/0/RP0/CPU0:R7#

RP/0/RP0/CPU0:R7#sh ip route 10.1.1.3/32

Wed Jul 21 18:56:52.408 UTC

Routing entry for 10.1.1.3/32

Known via "isis LAB", distance 115, metric 30, type level-2

Installed Jul 21 18:55:44.473 for 00:01:08

Routing Descriptor Blocks

10.2.7.2, from 10.1.1.3, via GigabitEthernet0/0/0/4, Backup (Local-LFA) << Classic-LFA

Route metric is 110

10.6.7.6, from 10.1.1.3, via GigabitEthernet0/0/0/1, Protected

Route metric is 30

No advertising protos.

RP/0/RP0/CPU0:R7#

Shortcomings of Classic-LFA

There are two common shortcomings with Classic-LFA:

• The backup path is sub-optimal. The cost for R7 to reach R3 is now R7-R2-R3 = 110. It would be more efficient to go R7-R11-R10-R9-R6-R2-R3 = 70. This is indeed the Post Convergence Path shown in the diagram above.

• Coverage is not 100%. If the link to R2 was not present, there would be no Classic-LFA backup path, since nothing satisfies the formula. If there’s no directly connected neighbor that satisfies the formula, nothing can be done!

Remote-LFA can help with some of these problems. To demonstrate this let’s remove the R2-R7 link…

Remote-LFA

Our topology now looks like this:

You can see there is no Classic-LFA path here if link C goes down. There obviously is a backup path (namely R11-R10-R9-R6-R2-R3), but if all we had was Classic-LFA we’d have to wait for the IGP to converge, which in most networks takes too long. R-LFA can step in and help, but in order to explain how it does so, we first need to define a couple of terms that describe the network from the point of view of the PLR: P-space and Q-space…

P and Q Space

The P-space and the Q-space are a collection of nodes within the network that have a specific relationship to the PLR or Destination, with respect to the Protected Element. This sounds complicated but I’ll walk through it. P and Q don’t stand for anything – they’re just arbitrary letters. Let’s start with the P-space…

P-space: In our context, the definition of P-space is “The set of nodes such that, the shortest-path from the PLR to them does not cross the Protected Element.”

This basically represents the set of devices such that R7’s best path to them doesn’t cross the R6-R7 link.

So to figure this out…

Start at R7 and for every other router in the network figure out R7’s best path to reach it.

Does its best path cross the R6-R7 link? (this includes all ECMP paths too!)

• yes? – then it is not in the P-space

• no? – then it is in the P-space

In our network the P-space contains these routers:

Note that even though R7 could reach R10 via R11 with a total cost of 30, it also has an equal-cost (30) path via R6 and R9 (R7-R6-R9-R10), which crosses the protected link and so disqualifies R10.
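The P-space calculation, including the ECMP rule, can be sketched in Python. The topology is a hypothetical reconstruction of the relevant part of the lab, not the actual configs: R7-R6, R6-R2, R2-R3 and R7-R11 at 10 and R11-R10 at 20 are derived from costs quoted in this post, while splitting the remaining 20 of R10-R9-R6 as 10/10 and treating R4 and R8 as stub neighbours of R7 are my assumptions. A node is excluded if any equal-cost shortest path to it uses the protected link.

```python
import heapq

# Hypothetical reconstruction (R-LFA stage, R2-R7 link removed). See assumptions above.
LINKS = {
    ("R7", "R6"): 10,   # Protected Element C
    ("R7", "R11"): 10,
    ("R7", "R4"): 10,   # assumed stub neighbour
    ("R7", "R8"): 10,   # assumed stub neighbour
    ("R11", "R10"): 20,
    ("R10", "R9"): 10,  # assumed 10/10 split of R10-R9-R6
    ("R9", "R6"): 10,
    ("R6", "R2"): 10,
    ("R2", "R3"): 10,
}
PROTECTED = ("R7", "R6")


def build_graph(links):
    """Turn {(a, b): cost} into a symmetric adjacency map."""
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


def dijkstra(graph, source):
    """Shortest-path cost from source to every node."""
    dist, heap = {source: 0}, [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry
        for nbr, cost in graph[node].items():
            if d + cost < dist.get(nbr, float("inf")):
                dist[nbr] = d + cost
                heapq.heappush(heap, (d + cost, nbr))
    return dist


def crosses(dist, src, dst, link, cost):
    """True if ANY equal-cost shortest path from src to dst uses the link."""
    a, b = link
    best = dist[src][dst]
    return (dist[src][a] + cost + dist[b][dst] == best
            or dist[src][b] + cost + dist[a][dst] == best)


def p_space(graph, dist, plr, link):
    cost = graph[link[0]][link[1]]
    return {x for x in graph if not crosses(dist, plr, x, link, cost)}


graph = build_graph(LINKS)
dist = {node: dijkstra(graph, node) for node in graph}
print(sorted(p_space(graph, dist, "R7", PROTECTED)))  # ['R11', 'R4', 'R7', 'R8']
```

The `crosses` test checks both directions of the link, so ECMP ties that use the protected link are caught without enumerating every path.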

So that’s the P-space, but what about the Q-space…?

Q-space: The formal definition of Q-space is “the set of nodes such that their shortest-path to the Destination does not cross the Protected Element.”

This basically represents the set of devices that can get to R3 (the Destination) without worrying about whether or not the R6-R7 link has failed. They are basically on the other side of the failure with respect to the Destination – or in other words, they are candidate Release Points.

So to figure this out…

Go to each router in the network and figure out its best path to reach R3.

Does its best path cross the R6-R7 link? (again, this includes all ECMP paths too!)

• yes? – then it is not in the Q-space

• no? – then it is in the Q-space

So in our network the Q-space contains these routers:

What we want is a place where P and Q overlap. If we can get it to that router we can avoid the downed Protected Element and get the traffic to the Destination.

But in our setup they don’t overlap!

However, we can use something called the extended P-space to increase our reach. So what is the extended P-space?

Think about the network from R7’s point of view. R7 can’t control what other routers do once it sends a packet on its way. But it can decide which of its interfaces it sends the packet out of. This allows us to consider not just our own P-space, but also the P-space of any directly connected Neighbors that exist in our own P-space. Adding all these together forms what we call the extended P-space.

In short, the extended P-space from the point of view of any node (in our case R7) is its own P-space plus the P-space of all of its directly connected P-space Neighbors.

So for R7, we calculate the P-space for R4, R11 and R8 (we don’t calculate the P-space of R6, since R6 is not in R7’s P-space).

The P-space for R4 and R8 are identical:

The P-space of R11 is a little bigger:

Again, to reiterate how we calculate R11’s P-space, look at each device in the network and include it if R11’s best path to reach it doesn’t cross C.

If we combine these, we get our extended P-space:

Now if we combine the extended P-space and the Q-space we have an overlap at R10!

Any nodes in the overlap are called PQ nodes – these will be valid Release Points. If there is more than one, R7 will select the nearest. But how do we get the traffic there? If the R6-R7 link failed and the PLR simply sent traffic to R11 it would send it straight back (remember R11 isn’t aware of the failure yet). Here’s where R-LFA and its tunnelling kick in…
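Extending the same hypothetical reconstruction (R7-R6, R6-R2, R2-R3, R7-R11 at 10 and R11-R10 at 20 from the post; the 10/10 split of R10-R9-R6 and R4/R8 as stubs assumed), this Python sketch adds the Q-space, the extended P-space and the PQ intersection. It then reruns the calculation with R10-R9 raised to 100. That value is also an assumption: it is the one that reproduces the 160 backup metric in the TI-LFA output later in the post under the 10/10 split.

```python
import heapq

# Hypothetical reconstruction (R-LFA stage, no R2-R7 link). See assumptions above.
LINKS = {
    ("R7", "R6"): 10, ("R7", "R11"): 10, ("R7", "R4"): 10, ("R7", "R8"): 10,
    ("R11", "R10"): 20, ("R10", "R9"): 10, ("R9", "R6"): 10,
    ("R6", "R2"): 10, ("R2", "R3"): 10,
}
PROTECTED = ("R7", "R6")


def build_graph(links):
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


def dijkstra(graph, source):
    dist, heap = {source: 0}, [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue
        for nbr, cost in graph[node].items():
            if d + cost < dist.get(nbr, float("inf")):
                dist[nbr] = d + cost
                heapq.heappush(heap, (d + cost, nbr))
    return dist


def crosses(dist, src, dst, link, cost):
    """True if any equal-cost shortest path from src to dst uses the link."""
    a, b = link
    best = dist[src][dst]
    return (dist[src][a] + cost + dist[b][dst] == best
            or dist[src][b] + cost + dist[a][dst] == best)


def p_space(graph, dist, node, link):
    cost = graph[link[0]][link[1]]
    return {x for x in graph if not crosses(dist, node, x, link, cost)}


def q_space(graph, dist, dest, link):
    cost = graph[link[0]][link[1]]
    return {x for x in graph if not crosses(dist, x, dest, link, cost)}


def extended_p_space(graph, dist, plr, link):
    """PLR's own P-space plus the P-spaces of its neighbors that are in it."""
    own = p_space(graph, dist, plr, link)
    ext = set(own)
    for nbr in graph[plr]:
        if nbr in own:
            ext |= p_space(graph, dist, nbr, link)
    return ext


def pq_nodes(links, plr, dest, link):
    """PQ nodes ordered nearest-first from the PLR (the nearest would be chosen)."""
    graph = build_graph(links)
    dist = {node: dijkstra(graph, node) for node in graph}
    pq = extended_p_space(graph, dist, plr, link) & q_space(graph, dist, dest, link)
    return sorted(pq, key=lambda n: dist[plr][n])


print(pq_nodes(LINKS, "R7", "R3", PROTECTED))         # ['R10']

# Assumed TI-LFA change: R10-R9 raised to 100.
ti_lfa_links = dict(LINKS)
ti_lfa_links[("R10", "R9")] = 100
print(pq_nodes(ti_lfa_links, "R7", "R3", PROTECTED))  # []
```

Under these assumptions the sketch agrees with the diagrams: R10 is the only PQ node at first, and there is no overlap at all once the R9-R10 metric is increased.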

Remote-LFA Tunnelling

Download the R-LFA configs here.

Once the PQ node is found, the PLR will prepare a backup path whereby it puts the protected traffic in an LSP that ends on the PQ node. This is done by pushing a label on top of the stack.

But before it does that, it must do the regular LDP swap operation for the original LSP to the Destination (R3). Under normal LDP conditions, it would swap the incoming label for the local label of its downstream neighbor (learned via LDP). But in this case R7 doesn’t have an LDP session to the PQ node (R10)… so it builds a targeted one!

Over this targeted LDP session the PQ node will tell the PLR what its local label is for the destination prefix. It is this label that the PLR will swap the transport label for before it pushes on the label that will forward traffic to the PQ node.

To put this in diagram form for our example:

In our example, R10 tells R7 what its local LDP label is for R3’s loopback. R7 swaps the transport label for this tLDP-learned label, then pushes R11’s label for R10 on top and forwards the packet to R11.

All of these tLDP sessions and calculations are done ahead of time, so that switching to the backup tunnel is as fast as possible.
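The R-LFA label stack boils down to two lookups done ahead of time. In this Python sketch the label values are the ones in R7’s CEF output in the verification that follows (24112 and 24017); the dictionaries and the function are hypothetical stand-ins for the LDP and tLDP label databases.

```python
# Label values from R7's CEF output; the dictionaries are hypothetical stand-ins
# for the LDP (link) and tLDP (targeted) label databases on the PLR.
LDP_BINDINGS = {"R11": {"R10": 24112}}  # learned from R11 over link LDP
TLDP_BINDINGS = {"R10": {"R3": 24017}}  # learned from R10 over targeted LDP


def rlfa_backup_stack(next_hop, pq_node, dest):
    """Labels the PLR imposes on the R-LFA backup path, top of stack first."""
    # Swap the incoming transport label for the PQ node's label for the Destination.
    inner = TLDP_BINDINGS[pq_node][dest]
    # Push the next hop's label for the PQ node to tunnel the packet there.
    outer = LDP_BINDINGS[next_hop][pq_node]
    return [outer, inner]


print(rlfa_backup_stack("R11", "R10", "R3"))  # [24112, 24017]
```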

Configuration and Verification for R-LFA

Here’s the IOS-XR configuration and verification output for R-LFA:

! Configuration

hostname R7

!

router isis LAB

is-type level-2-only

net 49.0100.1111.1111.0007.00

log adjacency changes

address-family ipv4 unicast

metric-style wide

advertise passive-only

mpls ldp auto-config

!

interface Loopback0

passive

address-family ipv4 unicast

!

!

interface GigabitEthernet0/0/0/0

point-to-point

address-family ipv4 unicast

fast-reroute per-prefix level 2

fast-reroute per-prefix remote-lfa tunnel mpls-ldp << Enable R-LFA

mpls ldp sync

!

<other interfaces left out for brevity>

! Verification of backup path

RP/0/RP0/CPU0:R7#show cef 10.1.1.3/32 detail

Wed Jul 21 19:07:22.598 UTC

10.1.1.3/32, version 387, internal 0x1000001 0x0 (ptr 0xe1bd080) [1], 0x0 (0xe380928), 0xa28 (0xeddfac8)

Updated Jul 21 19:05:46.659

remote adjacency to GigabitEthernet0/0/0/1

Prefix Len 32, traffic index 0, precedence n/a, priority 3

gateway array (0xe1e7da8) reference count 15, flags 0x500068, source lsd (5), 1 backups

[6 type 5 flags 0x8401 (0xe525560) ext 0x0 (0x0)]

LW-LDI[type=5, refc=3, ptr=0xe380928, sh-ldi=0xe525560]

gateway array update type-time 1 Jul 21 19:04:24.215

LDI Update time Jul 21 19:04:24.215

LW-LDI-TS Jul 21 19:04:38.931

via 10.7.11.11/32, GigabitEthernet0/0/0/3, 5 dependencies, weight 0, class 0, backup [flags 0x300]

path-idx 0 NHID 0x0 [0xecbd410 0x0]

next hop 10.7.11.11/32

remote adjacency

local label 16723 labels imposed {24112 24017} << 24112 is R11's label for R10 and 24017 is R10's label for R3

via 10.6.7.6/32, GigabitEthernet0/0/0/1, 5 dependencies, weight 0, class 0, protected [flags 0x400]

path-idx 1 bkup-idx 0 NHID 0x0 [0xed686d0 0x0]

next hop 10.6.7.6/32

local label 16723 labels imposed {16619}

Load distribution: 0 (refcount 6)

Hash OK Interface Address

0 Y GigabitEthernet0/0/0/1 remote

RP/0/RP0/CPU0:R7#

! Verification of R10’s tLDP session and config

RP/0/RP0/CPU0:R10#sh mpls ldp neighbor 10.1.1.7:0

Peer LDP Identifier: 10.1.1.7:0

TCP connection: 10.1.1.7:646 - 10.1.1.10:44079

Graceful Restart: No

Session Holdtime: 180 sec

State: Oper; Msgs sent/rcvd: 18/18; Downstream-Unsolicited

Up time: 00:03:58

LDP Discovery Sources:

IPv4: (1)

Targeted Hello (10.1.1.10 -> 10.1.1.7, passive)

IPv6: (0)

Addresses bound to this peer:

IPv4: (5)

10.1.1.7 10.4.7.7 10.6.7.7 10.7.8.7

10.7.11.7

IPv6: (0)

RP/0/RP0/CPU0:R10#sh run mpls ldp

mpls ldp

address-family ipv4

discovery targeted-hello accept << this is needed to accept targeted LDP sessions

!

!

RP/0/RP0/CPU0:R10#

So that’s R-LFA. It can help reach a Release Point that isn’t a directly connected neighbor. It’s also worth noting that R-LFA can use SR labels, if they are available, instead of tLDP.

Shortcomings of R-LFA

But there are shortcomings with R-LFA too:

• There is increased complexity with all of the tLDP sessions running everywhere.

• The backup path might still not be the post-convergence path – meaning that traffic will be forwarded suboptimally while the network converges and will then need to switch to the new best path once convergence is complete.

• Coverage is still not 100% – there might not be a PQ overlap!

We’ll look at just such a case with no PQ overlap next…

TI-LFA and SR

First off, let’s assume that we have removed LDP from our network and configured SR instead. I won’t go through the process of turning LDP off and turning SR on, beyond briefly showing this configuration:

segment-routing

global-block 17000 23999 << define the SRGB

!

router isis LAB

address-family ipv4 unicast

no mpls ldp auto-config << turn off LDP

metric-style wide

segment-routing mpls sr-prefer << enable SR

!

interface Loopback0

address-family ipv4 unicast

prefix-sid absolute 17001 << statically create the node SID

!

!

interface GigabitEthernet0/0/0/0

point-to-point

address-family ipv4 unicast

no mpls ldp sync << turn off LDP-IGP sync

!

!

The downloadable lab for this blog has both SR and LDP configured, but SR is preferred.

Now we’ll make another change to our topology by increasing the R9-R10 metric as follows:

This is a subtle change. But if we go through the process of calculating the P and Q spaces we get the following:

You can see there is no PQ overlap (this includes R7’s extended P-space). Let’s try to fix this using R-LFA…

We can’t do what we did last time and use R10 as the node to build our tLDP session to. If we tunnel traffic to R10, what label do we put at the bottom of the stack?

If we use R10’s local label for R3, it will forward the traffic straight back to R11, trying to use the R7-R6 link. This is precisely what it means to not be in the Q-space! It’s worth noting here that since we’re now using SR, R10’s local label for R3 will be the globally recognised 17003 label – but the problem will be the same either way, since R10’s best path to R3 crosses the R7-R6 link.

OK, but what about R9…? If we try to tunnel traffic to R9, R7 will have to send traffic to R11 with a top label of R11’s local label for R9 (again an SR label, namely 17009). But what will R11 do when it gets this packet? It will send the traffic straight back to R7 (again trying to use the R7-R6 link) – if this wasn’t the case, R9 would be in R11’s P-space, and therefore in R7’s extended P-space!

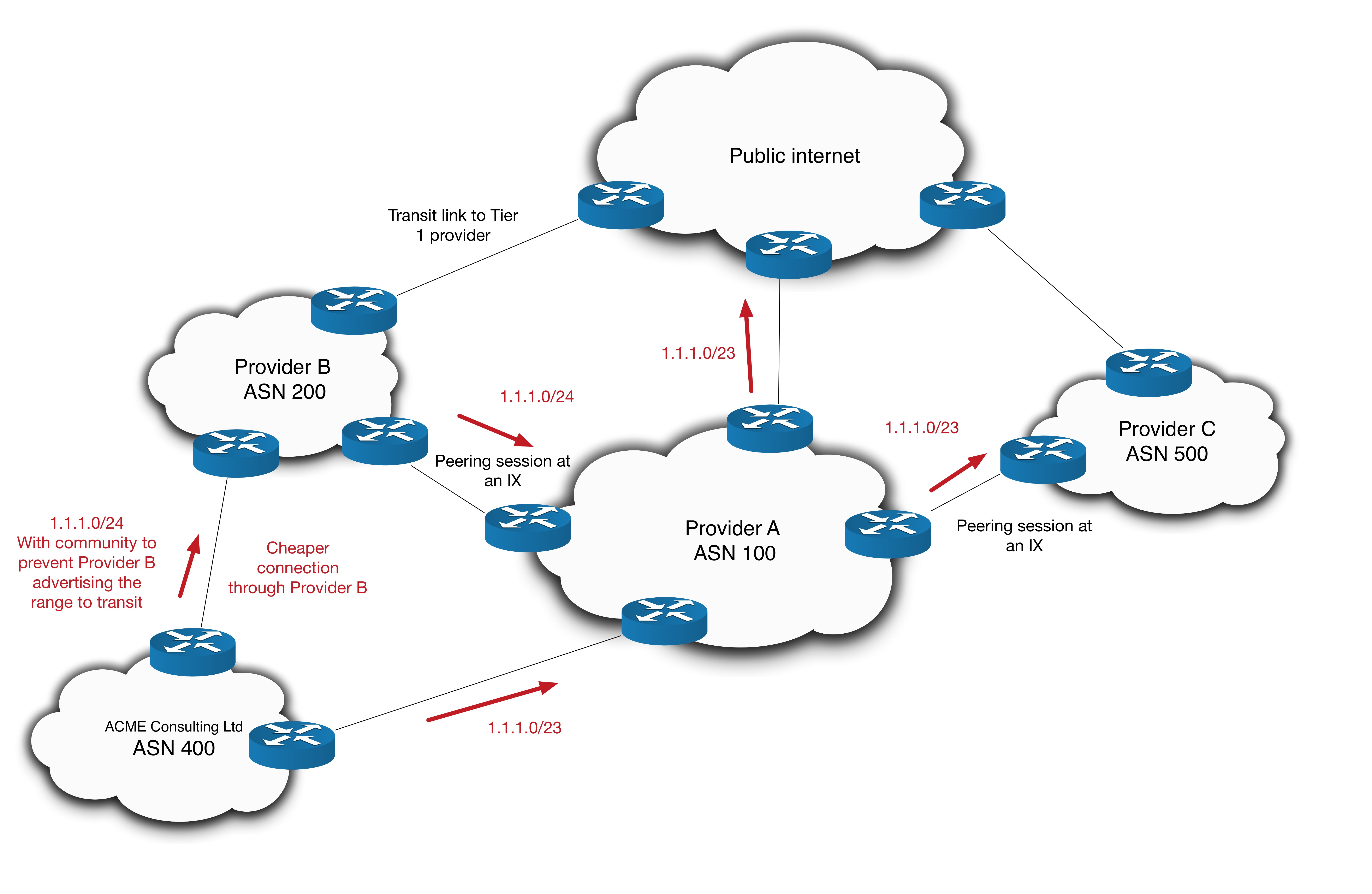

So what do we do? Well, here’s where the power of Segment Routing steps in. Topology Independent Loop Free Alternate (or TI-LFA) uses Adj-SIDs to bridge the gap between the P-space and the Q-space. Remember, Adj-SIDs are locally generated labels, communicated via the IGP TLVs, that act as instructions to forward traffic to a local neighbor.

R7 does the following:

• Calculates what the best path to R3 would be if the link from R6-R7 were to go down (or in other words, it calculates the post-convergence path) – in this case it sees the best path is R7-R11-R10-R9-R6-R2-R3.

• Calculates the Segment List needed to forward traffic along this path – assuming other nodes in the network will not yet be aware of the R6-R7 failure.

• Installs this Segment List as the backup path.

The details of the algorithm used to calculate the Segment List are not publicised by Cisco, but in our case the general principle is straightforward to grasp.

The topmost label (or segment) gets the traffic to the border P node – R10.

The next label is the Adj-SID that R10 has for R9. It basically instructs R10 to pop the Adj-SID label and forward it out of its Adjacency to R9 – this is the P–Q bridging in action.

The bottom of stack label is simply the Node-SID for R3. When R9 gets it, we know it will forward it on to R3 without crossing the protected link, because it is in the Q-space.

To put this in diagram form, we get the following:

Once TI-LFA is enabled all of this is calculated and installed automatically. A key thing to highlight here is that the backup path is the same as the post-convergence path. This means that traffic will not have to change its path through the network again when the IGP converges. The only thing that will change is the label stack.
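Since Cisco doesn’t publish its algorithm, treat this Python sketch only as a simplified illustration of the idea for this single-link case: walk the post-convergence path, take the last node still in the P-space as the P node and the first node in the Q-space as the Q node, then build {P Node-SID, P-to-Q Adj-SID, Prefix-SID}. The P and Q sets are assumptions taken from my own reconstruction of the topology (with R10-R9 at 100), the Adj-SID 24001 comes from the R7 output below, and longer repair lists are deliberately out of scope.

```python
SRGB_BASE = 17000                 # lab SRGB Base; Node-SID Index = router number
ADJ_SID = {("R10", "R9"): 24001}  # R10's Adj-SID for R9, from the R7 output


def node_sid(router):
    """Node-SID label for a router named like 'R10' (Index = router number)."""
    return SRGB_BASE + int(router.lstrip("R"))


def ti_lfa_segments(path, p_space, q_space, adj_sid):
    """Simplified single-link repair list, top of stack first.

    path: post-convergence path from the PLR to the Destination.
    """
    # P node: last node reached from the PLR before the path leaves the P-space.
    p_idx = 0
    while p_idx + 1 < len(path) and path[p_idx + 1] in p_space:
        p_idx += 1
    # Q node: first node on the path that is in the Q-space.
    q_idx = next(i for i, n in enumerate(path) if n in q_space)
    dest = path[-1]
    if q_idx <= p_idx:
        # A PQ node exists on the path: one Node-SID gets us there (R-LFA style).
        return [node_sid(path[q_idx]), node_sid(dest)]
    if q_idx == p_idx + 1:
        # P and Q are adjacent: bridge the gap with the P node's Adj-SID for Q.
        p_node, q_node = path[p_idx], path[q_idx]
        return [node_sid(p_node), adj_sid[(p_node, q_node)], node_sid(dest)]
    raise NotImplementedError("longer repair lists are out of scope for this sketch")


post_convergence = ["R7", "R11", "R10", "R9", "R6", "R2", "R3"]
p_nodes = {"R4", "R7", "R8", "R10", "R11"}  # assumed (extended) P-space of R7
q_nodes = {"R2", "R3", "R6", "R9"}          # assumed Q-space of R3
print(ti_lfa_segments(post_convergence, p_nodes, q_nodes, ADJ_SID))
# [17010, 24001, 17003]
```

The result matches the `labels imposed {17010 24001 17003}` entry in R7’s CEF output, which is reassuring, but the real implementation handles many more cases (node and SRLG protection, multiple Adj-SIDs, and so on).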

Configuration and Verification of TI-LFA

TI-LFA is pretty easy to configure, and verification is straightforward when you know what to look for…

! Configuration

hostname R7

!

router isis LAB

is-type level-2-only

net 49.0100.1111.1111.0007.00

log adjacency changes

address-family ipv4 unicast

metric-style wide

advertise passive-only

mpls ldp auto-config

!

interface Loopback0

passive

address-family ipv4 unicast

!

!

interface GigabitEthernet0/0/0/0

point-to-point

address-family ipv4 unicast

fast-reroute per-prefix level 2

fast-reroute per-prefix ti-lfa level 2 << Enable TI-LFA

!

<other interfaces left out for brevity>

! Verification

RP/0/RP0/CPU0:R7#show isis fast-reroute 10.1.1.3/32 detail

Thu Jul 22 21:30:52.232 UTC

L2 10.1.1.3/32 [30/115] Label: 17003, medium priority

via 10.6.7.6, GigabitEthernet0/0/0/1, Label: 17003, R6, SRGB Base: 17000, Weight: 0

Backup path: TI-LFA (link), via 10.7.11.11, GigabitEthernet0/0/0/3 R11, SRGB Base: 17000, Weight: 0, Metric: 160

P node: R10.00 [10.1.1.10], Label: 17010 <<< P and Q nodes are specified

Q node: R9.00 [10.1.1.9], Label: 24001 <<< 24001 is R10s Adj-SID for R9

Prefix label: 17003

Backup-src: R3.00

P: No, TM: 160, LC: No, NP: No, D: No, SRLG: Yes

src R3.00-00, 10.1.1.3, prefix-SID index ImpNull, R:0 N:1 P:0 E:0 V:0 L:0,

Alg:0

RP/0/RP0/CPU0:R7#

RP/0/RP0/CPU0:R7#show cef 10.1.1.3/32 detail
Thu Jul 22 21:31:05.178 UTC
10.1.1.3/32, version 837, labeled SR, internal 0x1000001 0x83 (ptr 0xe1ba878) [1], 0x0 (0xe3800e8), 0xa28 (0xee59a38)
Updated May 23 15:22:46.988
remote adjacency to GigabitEthernet0/0/0/1
Prefix Len 32, traffic index 0, precedence n/a, priority 1
gateway array (0xe1e7838) reference count 15, flags 0x500068, source rib (7), 1 backups
[6 type 5 flags 0x8401 (0xe524e10) ext 0x0 (0x0)]
LW-LDI[type=5, refc=3, ptr=0xe3800e8, sh-ldi=0xe524e10]
gateway array update type-time 1 May 23 15:22:46.989
LDI Update time Jul 22 20:51:34.950
LW-LDI-TS Jul 22 20:51:34.951
via 10.7.11.11/32, GigabitEthernet0/0/0/3, 12 dependencies, weight 0, class 0, backup (TI-LFA) [flags 0xb00]
path-idx 0 NHID 0x0 [0xecbd770 0x0]
next hop 10.7.11.11/32, Repair Node(s): 10.1.1.10, 10.1.1.9 << PQ nodes called repair nodes
remote adjacency
local label 17003 labels imposed {17010 24001 17003}
via 10.6.7.6/32, GigabitEthernet0/0/0/1, 12 dependencies, weight 0, class 0, protected [flags 0x400]
path-idx 1 bkup-idx 0 NHID 0x0 [0xf082510 0x0]
next hop 10.6.7.6/32
local label 17003 labels imposed {17003}
Load distribution: 0 (refcount 6)
Hash OK Interface Address
0 Y GigabitEthernet0/0/0/1 remote
RP/0/RP0/CPU0:R7#

Demonstration

To close off this blog, I’ll give a packet capture demonstration of TI-LFA in action. In my EVE-NG lab environment, if I start a constant ping from R8 to R3 and then shut down the R6-R7 link, the IGP converges too fast for me to capture any TI-LFA encapsulated packets. To fix this problem, I updated the PLR as follows:

RP/0/RP0/CPU0:R7#conf t
Fri Aug 27 17:00:09.741 UTC
RP/0/RP0/CPU0:R7(config)#ipv4 unnumbered mpls traffic-eng Loopback0
RP/0/RP0/CPU0:R7(config)#!
RP/0/RP0/CPU0:R7(config)#mpls traffic-eng
RP/0/RP0/CPU0:R7(config-mpls-te)#!
RP/0/RP0/CPU0:R7(config-mpls-te)#router isis LAB
RP/0/RP0/CPU0:R7(config-isis)# address-family ipv4 unicast
RP/0/RP0/CPU0:R7(config-isis-af)# microloop avoidance segment-routing
RP/0/RP0/CPU0:R7(config-isis-af)# microloop avoidance rib-update-delay 10000
RP/0/RP0/CPU0:R7(config-isis-af)#commit

I’m not going to go into detail on what microloop avoidance is here. Put briefly, a microloop is, as the name suggests, a very short-lived routing loop. It happens because different routers update their forwarding tables at different rates after a network change. Microloop avoidance is a mechanism that uses Segment Routing to detect and avoid such conditions.

The main takeaway here, though, is the rib-update-delay command. This instructs the router to hold the LFA path in the RIB for a set period, regardless of whether or not it could converge more quickly. In our case, we’re instructing R7 to keep forwarding traffic along the TI-LFA backup path for 10 seconds (10,000 milliseconds) after the R6-R7 failure.

Once this was sorted, I started a packet capture on R11’s interface facing R7 and repeated the test…

RP/0/RP0/CPU0:R8#ping 10.1.1.3 source lo0 repeat 10000
Fri Aug 27 17:01:29.996 UTC
Type escape sequence to abort.
Sending 10000, 100-byte ICMP Echos to 10.1.1.3, timeout is 2 seconds:
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!.!!!!!!!!!!!!!!!!
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!...!!!!!!!!!!!!!!!!!!!!!!!!!
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!.!!!!!!!!!!!!!!!!!!!!!
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!.!!!!!!!!!!!!!!!!!
<output omitted>

RP/0/RP0/CPU0:R7#conf t
Fri Aug 27 17:01:50.259 UTC
RP/0/RP0/CPU0:R7(config)#interface gigabitEthernet 0/0/0/1
RP/0/RP0/CPU0:R7(config-if)#shut
RP/0/RP0/CPU0:R7(config-if)#commit
Fri Aug 27 17:01:58.731 UTC
RP/0/RP0/CPU0:R7(config-if)#

If we look at the PCAP on R11, we can see that the correct labels are being used on the ICMP packets.

Checking R7 before the 10 seconds are up shows a backup TI-LFA tunnel in use:

RP/0/RP0/CPU0:R7#sh isis fast-reroute tunnel
Fri Aug 27 17:02:04.210 UTC
IS-IS LAB SRTE backup tunnels
tunnel-te32770, state up, type primary-uloop
Outgoing interface: GigabitEthernet0/0/0/3
Next hop: 10.7.11.11
Label stack: 17010, 24001
Prefix: 10.1.1.1/32 10.1.1.2/32 10.1.1.3/32 10.1.1.5/32 10.1.1.6/32 10.1.1.9/32
RP/0/RP0/CPU0:R7#

The Prefix field shows all of the IGP destinations for which R7 will use this tunnel. Note that 10.1.1.3/32 is in that list. You can try this yourself in the downloadable lab.
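As a quick sanity check on the 10-second window, the sketch below compares two timestamps from the output above:

- the commit of the shutdown: 17:01:58.731;
- the `sh isis fast-reroute tunnel` command: 17:02:04.210.

Treating the commit time as the moment of failure is an assumption, since the interface actually goes down a little after the commit is processed. The helper names are hypothetical.

```python
from datetime import datetime

# rib-update-delay configured on R7, in milliseconds
RIB_UPDATE_DELAY_MS = 10_000
# HH:MM:SS.mmm as printed by IOS-XR; when parsing, %f accepts 1-6 digits
TIME_FORMAT = "%H:%M:%S.%f"


def elapsed_ms(start: str, end: str) -> float:
    """Milliseconds between two same-day timestamps (midnight rollover not handled)."""
    delta = datetime.strptime(end, TIME_FORMAT) - datetime.strptime(start, TIME_FORMAT)
    return delta.total_seconds() * 1000


def within_rib_update_delay(failure: str, check: str,
                            delay_ms: int = RIB_UPDATE_DELAY_MS) -> bool:
    """True if the check was made before the RIB update delay expired."""
    return elapsed_ms(failure, check) < delay_ms


if __name__ == "__main__":
    shut_commit = "17:01:58.731"  # commit of 'shut' on GigabitEthernet0/0/0/1
    show_tunnel = "17:02:04.210"  # 'sh isis fast-reroute tunnel'
    print(round(elapsed_ms(shut_commit, show_tunnel)))        # 5479
    print(within_rib_update_delay(shut_commit, show_tunnel))  # True
```

Roughly 5.5 seconds had passed, comfortably inside the 10,000 ms delay. That is consistent with the tunnel still being listed.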

Conclusions

So that’s TI-LFA using SR! I’ve tried to present this blog as a basic introduction to LFA types, as well as a demonstration of how powerful SR can be. There are more nuanced scenarios involving a mix of SR-capable and LDP-only nodes, but LDP/SR interoperation is another topic entirely. We’ve seen how traditional technologies like Classic LFA and R-LFA are adequate in most circumstances, but TI-LFA, with the power of SR, can provide complete coverage. Thank you for reading.

It’s not easy building GRE

The importance of having backup paths in a network isn’t a revelation to anyone. From HSRP on a humble pair of Cisco 887s to TI-LFA integration on an ASR9k, having a reliable backup path is a staple for all modern networks.

This quirk looks at the need for a backup path on a grand scale. We’ll look at a hypothetical scenario of a multi-national ISP losing a backup path to a whole region and how, as a rapid response solution, it builds a redundant path over a Transit Provider…

Scenario

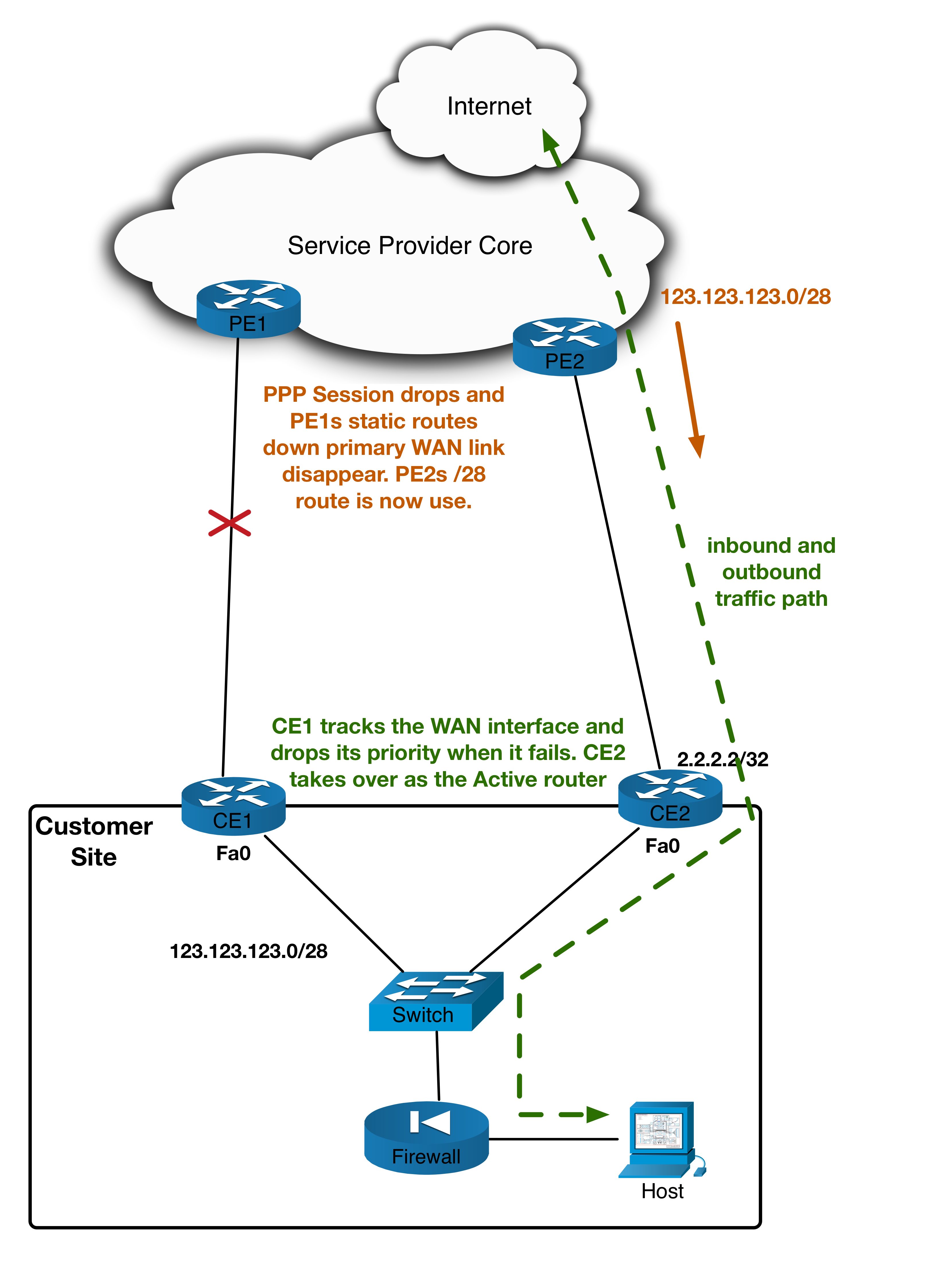

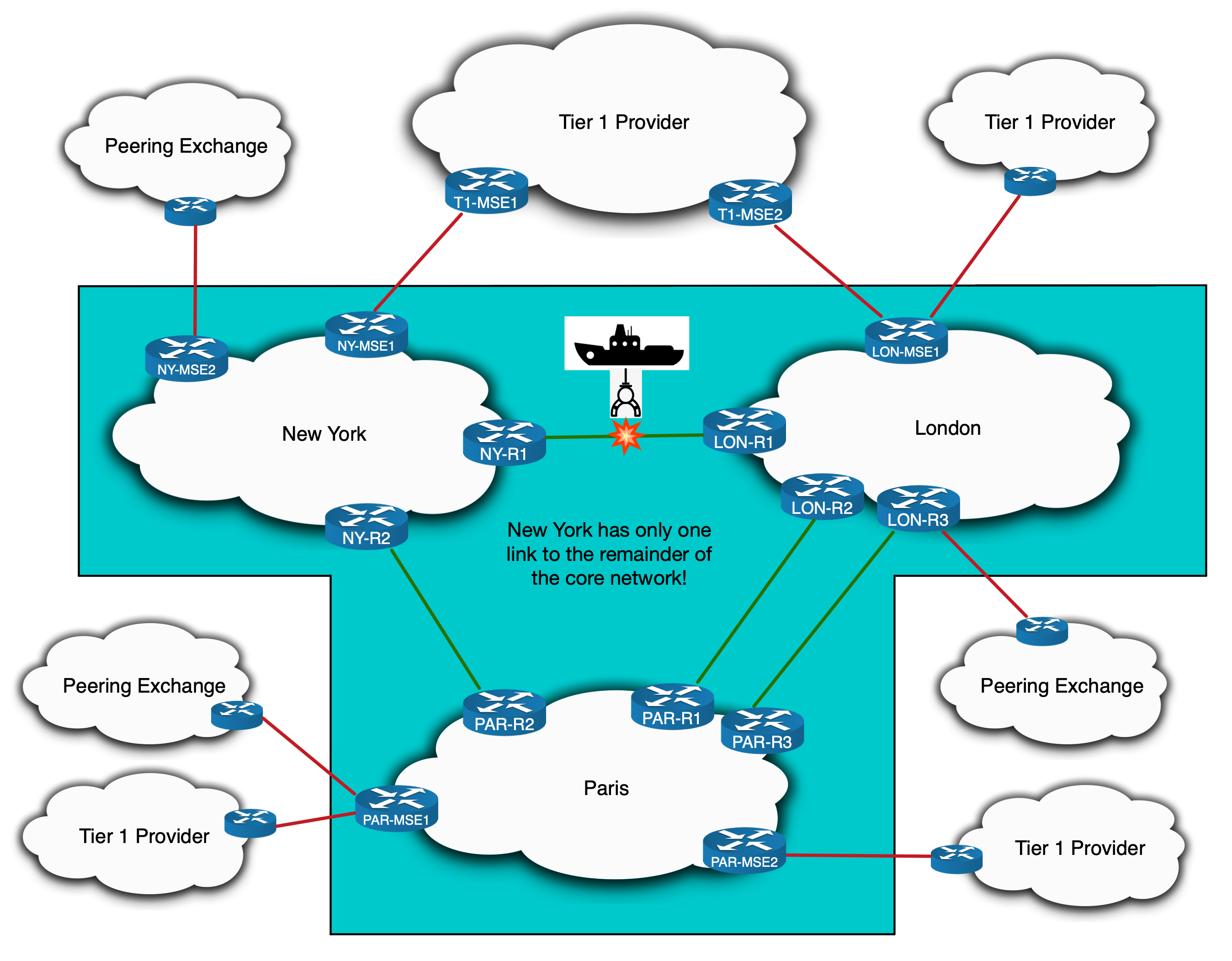

So here is our hypothetical Tier 2 Service Provider network. It is spread across three cities in three different countries and has various Peering and Transit connections throughout:

IS-IS and LDP are run internally. This includes the international links, resulting in one contiguous IGP domain. What’s important to note here is that New York only has a single link to the other countries and only has one Tier 1 Transit Provider.

The quirk

To set up this quirk, we need a link failure to take place. Let’s say a deep-sea dredger rips up the cable in the Atlantic going from New York to London.

New York doesn’t lose access – it still has a link to the rest of the network via Paris. However that link to Paris is now its sole connection to the rest of the network. In other words, New York no longer has that all important backup path. The situation is exacerbated when you learn that a repair boat won’t be sent to fix the undersea cable for weeks!

So what do you do?

You could invest in more fibre and undersea cabling to connect your infrastructure – arguably you should’ve already done this! But placing an order for a Layer 2 Service or contacting a Tier 1 Provider to set up CsC (Carrier supporting Carrier) takes time. By all means, place the order. But in the meantime, you’ll need to set something up quickly in case the New York to Paris link fails and New York becomes completely isolated.

One option, and indeed the one we’ll explore in this blog, is to reconnect New York to London through your transit provider without waiting for an order or even involving them at all…

I should preface this by stating that this solution is neither scalable nor sustainable. But it is most definitely an interesting and … well… quirky work around that can be deployed at a pinch.

With that said, how do we actually do this?

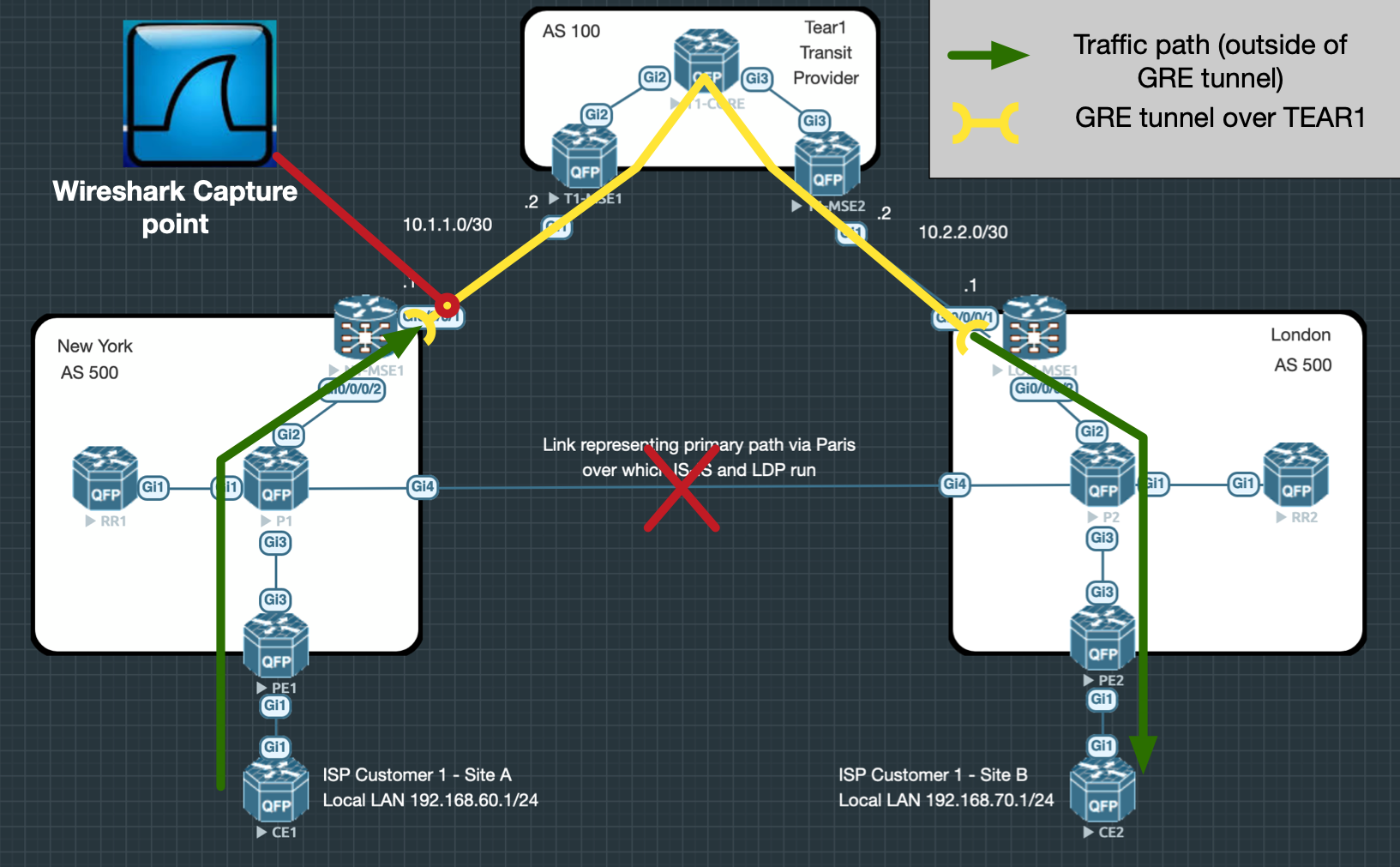

In order to connect New York to London over another network, we’ll need to implement tunnelling of some kind. Specifically, we’ll look at creating a GRE tunnel between the MSEs in New York and London using the Tier 1 Transit Provider as the underlay network.
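For readers less familiar with GRE, the sketch below shows what the encapsulation adds in the simplest case. That is a 4-byte base GRE header (RFC 2784, with no checksum, key or sequence number). Its Protocol Type field identifies the payload:

- 0x0800 for IPv4;
- 0x8847 for MPLS unicast, the type RFC 4023 uses for MPLS-in-GRE.

The router then wraps this in an outer IPv4 header carrying IP protocol number 47, which the sketch leaves out. The label value 16001 is a hypothetical example, and the function names are illustrative only, not taken from any router software.

```python
import struct

GRE_PROTO_IPV4 = 0x0800          # payload is an IPv4 packet
GRE_PROTO_MPLS_UNICAST = 0x8847  # payload is an MPLS label stack (e.g. LDP-labelled traffic)


def gre_header(protocol_type: int) -> bytes:
    """Base GRE header: C bit, reserved bits and version all zero, then the protocol type."""
    flags_and_version = 0x0000
    return struct.pack("!HH", flags_and_version, protocol_type)


def mpls_label_entry(label: int, tc: int = 0, bottom_of_stack: bool = True,
                     ttl: int = 64) -> bytes:
    """One 32-bit MPLS label stack entry: label(20) | TC(3) | S(1) | TTL(8)."""
    word = (label << 12) | (tc << 9) | (int(bottom_of_stack) << 8) | ttl
    return struct.pack("!I", word)


def gre_encapsulate(payload: bytes, protocol_type: int) -> bytes:
    """Prepend a base GRE header; the outer IPv4 header (protocol 47) is added separately."""
    return gre_header(protocol_type) + payload


if __name__ == "__main__":
    # Hypothetical payload truncated to a single label (16001, bottom of stack, TTL 64).
    frame = gre_encapsulate(mpls_label_entry(16001), GRE_PROTO_MPLS_UNICAST)
    print(frame.hex())  # 0000884703e81140
```

Using the MPLS protocol type is what lets LDP-labelled traffic ride inside the tunnel unchanged, which matters once IS-IS and LDP are run over it.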

To put it in diagram form, our goal is to have something like this:

To guide us through this setup, I’ll tackle the process step by step using the following sections:

- Tunnel-end point Reachability
  - Control Plane: The IPs of the GRE tunnel endpoints will need reachability over the Tier 1 Transit Provider.
  - Data Plane: Traffic between the endpoints must be able to flow. This section will examine what packet filtering might need adjusting.
- GRE tunnel configuration
  - Control Plane: This covers the configuration and signalling of the GRE tunnel.
  - Data Plane: We’ll need to look at MTU and account for the additional overhead added by the GRE headers.
- Tunnel overlay protocols: Making sure IS-IS and LDP can be run over the GRE tunnel, including the proper transport addresses and metrics.
- Link Capacity: This new tunnel will need to be able to take the same amount of traffic that typically flows to and from New York. Given that our control over this is limited, we’ll assume that there is sufficient bandwidth on these links.
- RTBH: Any Remotely Triggered Black Hole mechanisms that have been applied to your Transit ports may need exceptions so that they do not mistake your own traffic for a DDoS attack.
- Security: You could optionally encrypt the traffic crossing the Transit Provider’s network.

The goal of this quirk is to explore the routing and reachability side of the scenario so I will discuss the first 3 of the above points in detail and assume that link capacity, RTBH and security are already accounted for.
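The GRE data plane point is mostly arithmetic, so here is a rough Python sketch of it. It assumes GRE over an IPv4 underlay, which adds the following in front of the payload:

- 20 bytes of outer IPv4 header;
- a 4-byte base GRE header;
- 4 more bytes for each optional checksum, key or sequence field (RFC 2784 and RFC 2890);
- 4 bytes per MPLS label carried inside the tunnel.

The 1500-byte underlay MTU and the two-label example are assumptions for illustration. The real path MTU across the Transit Provider would need to be confirmed.

```python
# Rough GRE-over-IPv4 overhead calculator (illustrative assumptions, see lead-in).
OUTER_IPV4_HEADER = 20   # outer IPv4 header without options
GRE_BASE_HEADER = 4      # flags/version + protocol type
GRE_OPTIONAL_FIELD = 4   # checksum(+reserved1), key, or sequence number: 4 bytes each
MPLS_LABEL_ENTRY = 4     # one MPLS label stack entry


def gre_overhead(checksum: bool = False, key: bool = False, sequence: bool = False) -> int:
    """Bytes added in front of the tunnel payload by GRE over IPv4."""
    optional_fields = sum((checksum, key, sequence))
    return OUTER_IPV4_HEADER + GRE_BASE_HEADER + GRE_OPTIONAL_FIELD * optional_fields


def max_inner_ip_packet(underlay_mtu: int, mpls_labels: int = 0, **gre_options: bool) -> int:
    """Largest inner IP packet that fits without fragmenting the outer packet."""
    return underlay_mtu - gre_overhead(**gre_options) - MPLS_LABEL_ENTRY * mpls_labels


if __name__ == "__main__":
    print(gre_overhead())                            # 24
    print(max_inner_ip_packet(1500))                 # 1476
    print(max_inner_ip_packet(1500, mpls_labels=2))  # 1468
    print(max_inner_ip_packet(1500, key=True))       # 1472
```

Read the other way round, carrying full 1500-byte packets with two labels would need an underlay path MTU of at least 1532 bytes. A Transit Provider will not necessarily offer that.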

Downloadable Lab

This quirk considers the point of view of a large Service Provider with potentially hundreds of routers. However, in order to demonstrate the configuration specifics and allow you to try the setup, I’ve built a small-scale lab to emulate the solution. I’ve altered some of the output shown in this blog to make it appear more realistic. As such, the lab and the output shown in this post don’t match each other verbatim. But I’ll turn to the lab towards the end to do a couple of traceroutes. For the most part, the IP addressing and configuration match closely enough for you to follow along.

I built the lab in EVE-NG, so it can be downloaded as a zipped UNL file. I’ve also provided a zip file containing the configuration for each node, in case you’re using a different lab emulation program.

With that said, let’s take a look at how we’d set this up…

Tunnel-end point Reachability – Control Plane

We’ll start by putting some IP addressing on the topology:

(the addressing used throughout will be private, but in a real world scenario it would be public)

Now we might first try to build the tunnel directly between our routers, using 10.1.1.1 and 10.2.2.1 as the respective endpoints. But if we try to ping and trace from one to the other, we see that both fail:

RP/0/RSP0/CPU0:NY-MSE1#show route ipv4 10.2.2.1

Routing entry for 10.2.0.0/16

Known via "bgp 500", distance 20, metric 0

Tag 100, type external

Installed Jul 12 15:36:41.110 for 2w2d

Routing Descriptor Blocks

10.1.1.2, from 10.1.1.2, BGP external

Route metric is 0

No advertising protos.

RP/0/RSP0/CPU0:NY-MSE1#ping 10.2.2.1

Wed Jul 26 15:26:21.374 EST

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 10.2.2.1, timeout is 2 seconds:

.....

Success rate is 0 percent (0/5)

RP/0/RSP0/CPU0:NY-MSE1#traceroute 10.2.2.1

Type escape sequence to abort.

Tracing the route to 10.2.2.1

1 10.1.1.2 2 msec 1 msec 1 msec